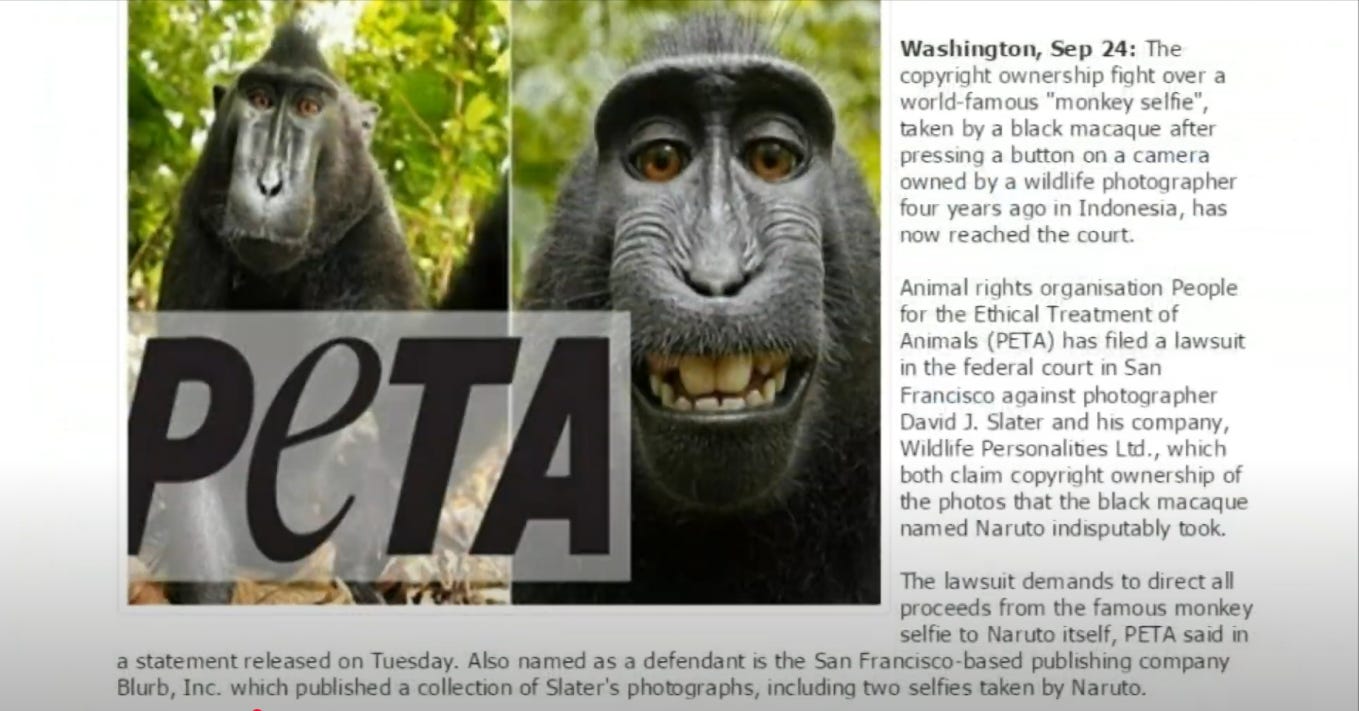

What is the Naruto monkey selfie case and why does it matter for AI?

A monkey takes a selfie. Years later, a federal judge must decide who owns it.

In the courtroom, the judge asks with a straight face whether Naruto would be required by law to provide written notice to other macaque monkeys before joining a lawsuit. The courtroom laughs.

Here’s what’s not funny: the initial ruling.

No human author, no rights. Period.

Right now, if you’re creating with AI, the legal system says the same thing to you.

Spend hours refining prompts, making hundreds of creative decisions, shaping output until it’s exactly right. Someone else can take it, use it, sell it. You get nothing. When you admit AI was involved, your work loses protection.

So you stay quiet. Many of us pretend we’re not using the tool reshaping creative work at a speed and volume no human can match (or maybe should).

Why are we treating human creativity with AI the same way we treat a monkey with a camera?

And what does the shame around admitting you use AI, from both sides, reveal about what’s broken?

This isn’t about whether AI deserves copyright. It’s about whether creators working with AI deserve protection for their work.

Right now, the answer is zero. Not 25%. Zero.

The monkey got 25%. You get shame, silence, and zero protection.

Let’s talk about why, and what that 25% reveals about creative rights with AI.

What happened in the Naruto v. Slater settlement?

Indonesia, 2011. Wildlife photographer David Slater sets up his camera in the jungle.

Naruto, a crested macaque, grabs it and starts clicking. Many photos. Most are blurry, random, kind of what you’d expect from a monkey with a camera.

But a few? Perfect. Composition, timing, expression. The kind of selfie humans take ten tries to get right.

They go viral. Wikipedia posts them as public domain with a simple explanation: the monkey took the photo, not the photographer.

Slater objects. He set up the equipment. He created the conditions. He made the monkey photos possible.

Then PETA sues on Naruto’s behalf. Not because they think the monkey deserves rights, but to make a point about animal rights and who controls creative output.

The court doesn’t debate whether the photos are creative. They are. The court doesn’t question whether they have artistic merit. They do.

The question is simpler: Without human creative control, is there anything to protect?

The answer: No.

Not because the work lacks value. Because the law was built for human creators, and nobody knows what to do when creativity crosses species. And in our case, when it crosses into working with machines.

The case drags on for years. Slater’s exhausted. PETA wants a resolution. So they settle.

25% of future revenue from the photos goes to charities protecting crested macaques in Indonesia. Not because Naruto won. Because everyone wanted it to end.

Not full ownership. Not recognition as the creator. Just a cut.

The photographer keeps the rest, even though the monkey pressed the button. The monkey gets a percentage, even though the photographer created the conditions.

Maybe the answer to “who owns this?” isn’t either/or.

Maybe it’s not human OR monkey. Maybe it’s not human OR AI.

Maybe the real question is this: when different forms of intelligence work together, even by accident, what does fair look like?

Because right now, with AI, we’re not even asking that question. We’re just saying zero.

Should I admit to using AI in my creative work?

Realistically, you probably shouldn’t if you’re even asking the question.

Not because using AI is wrong. Because admitting it sometimes costs.

You create something with AI. Spend hours refining prompts, making creative decisions, shaping output.

The Copyright Office’s position is clear: no human creative input that rises above AI’s contribution, no protection.

How much is too much AI? Nobody knows. Nobody will tell you. You won’t find out until someone challenges your work or there’s money involved.

Take Jason Allen’s Théâtre D’opéra Spatial. He ran 600 prompts through Midjourney, made hundreds of choices about composition and style, won a Colorado art competition. Then applied for copyright protection.

Denied. AI-generated, so no protection. The 600 prompts didn’t matter. The creative decisions didn’t count.

What’s a creator supposed to do?

You write an article. Use AI to help with research, maybe structure, some editing. Do you mention it? Do you check a box on YouTube saying you used AI?

Why would you? Admission means zero protection and convinces people the work isn’t really yours. Maybe it’s just scraped content from the internet, regurgitated.

So you stay quiet. Everyone stays quiet. And we pretend we’re not using the tool reshaping creative work at speed and volume no human can match. Or maybe should.

That’s the liar’s dividend. The reward for silence.

We don’t measure human-created work by what tools were used. We measure it by whether it’s original, inventive, new. Whether we like it.

Why is AI different?

Fear. The Scarlet AI. There’s this idea that admitting AI involvement means you’re not a “real” creator. That it diminishes the work. That you’ll lose protection, respect, everything.

Some people call creators using AI lazy or fake. Others, like tech builders and AI engineers, call creators greedy and entitled when they ask for permission, payment, and transparency about how their work trains these systems.

Both sides are shaming. Both sides are wrong.

And creators are caught in the middle, hiding their tools and their process because honesty is punished.

The conversation about what’s possible when different forms of intelligence work together never happens. We’re stuck in either/or thinking: Human or AI. Real or fake. Creative or automated.

What happens when different forms of intelligence learn to work together?

Right now, we’re too afraid to even ask.

Why don’t AI creators have copyright protection?

Because the law is asking the wrong question.

Courts keep asking: “Is it human enough?”

When they should be asking:

“Is it creative? Is it original? Does it show intention?”

The legal system was built for a world where humans were the only ones making creative choices. Now we have tools that can generate, suggest, and refine, and suddenly nobody knows how to measure what the human contributed.

So, they default to the simple rule: No human author, no rights.

It’s the same logic that denied Naruto. The photos were creative. They showed artistic choices: framing, light, expression. But without a human holding the camera, the law had nothing to protect.

We’re living that same logic right now. You make hundreds of creative decisions working with AI. You choose what works and what doesn’t. What to keep, what to throw away. That’s not an accident. That’s intention.

How much human involvement is enough?

Who decides? Where’s the line?

The Copyright Office won’t tell you. They’ll just evaluate your work after the fact and decide whether you crossed some invisible boundary between “tool” and “creator.”

And the law can’t keep up. We’re still litigating cases from three, four, five years ago. AI evolves daily. By the time a court decides what was acceptable in 2021, we’re already working with completely different systems in 2026.

The question isn’t whether AI deserves copyright. It’s whether creators working with AI deserve protection for the choices they’re making.

Right now, the answer is: only if you can prove you did more than the AI did.

Good luck measuring that. Try asking ChatGPT that.

Could a 25% revenue model work for AI and creators?

Naruto’s settlement wasn’t about who was right. It was about ending a fight nobody could win.

The photographer didn’t get full ownership. The monkey didn’t get recognition as the creator. They landed on 25% of future revenue going to macaque conservation. Not because it was fair. Because it was something.

And that number didn’t come from judges or juries. It came from two parties trying to figure out what made sense when the rules didn’t fit the situation.

How about applying that same thinking to AI?

Right now, AI companies take trillions of pieces of creative work - articles, images, code, music - to train their systems. Their argument: what comes out isn’t what went in, so it’s transformative. Fair use.

Meanwhile, creators get nothing. No payment. No permission asked. No transparency about what was used or how.

And creators using AI get nothing either. No protection for the hours spent refining prompts and making creative choices. No way to prove what they contributed, or to move beyond human-only rights.

What if both sides got something?

What if a percentage of the trillions in compute costs went back to the creators whose work trained these systems? Not full ownership. Not a veto over AI development. Just a cut that acknowledges their work made this possible.

And what if creators working with AI got protection for their output? Not full copyright, but something that recognizes the creative choices they’re making.

The monkey got 25%. Photographers using AI get zero. The creators whose work trained the AI get zero.

What if we stop arguing about who deserves what and start asking what makes sense when creativity isn’t cleanly human anymore?

That’s not a legal answer. It’s a practical one. And right now, we’re not even having that conversation because we’re too busy shaming each other.

What creative choices are you making with AI that nobody sees?

We’re all Naruto now. Picking up tools we didn’t build, making creative choices the law doesn’t know how to recognize.

What are you not admitting you’re using AI for? What creative choices are you making that nobody sees because you’re afraid of what happens if you’re honest?

That silence is the problem we need to solve. Not with more lawsuits. Not with more shame from either direction.

But by talking about what works, what doesn’t, and what fair looks like when creativity isn’t cleanly human anymore.

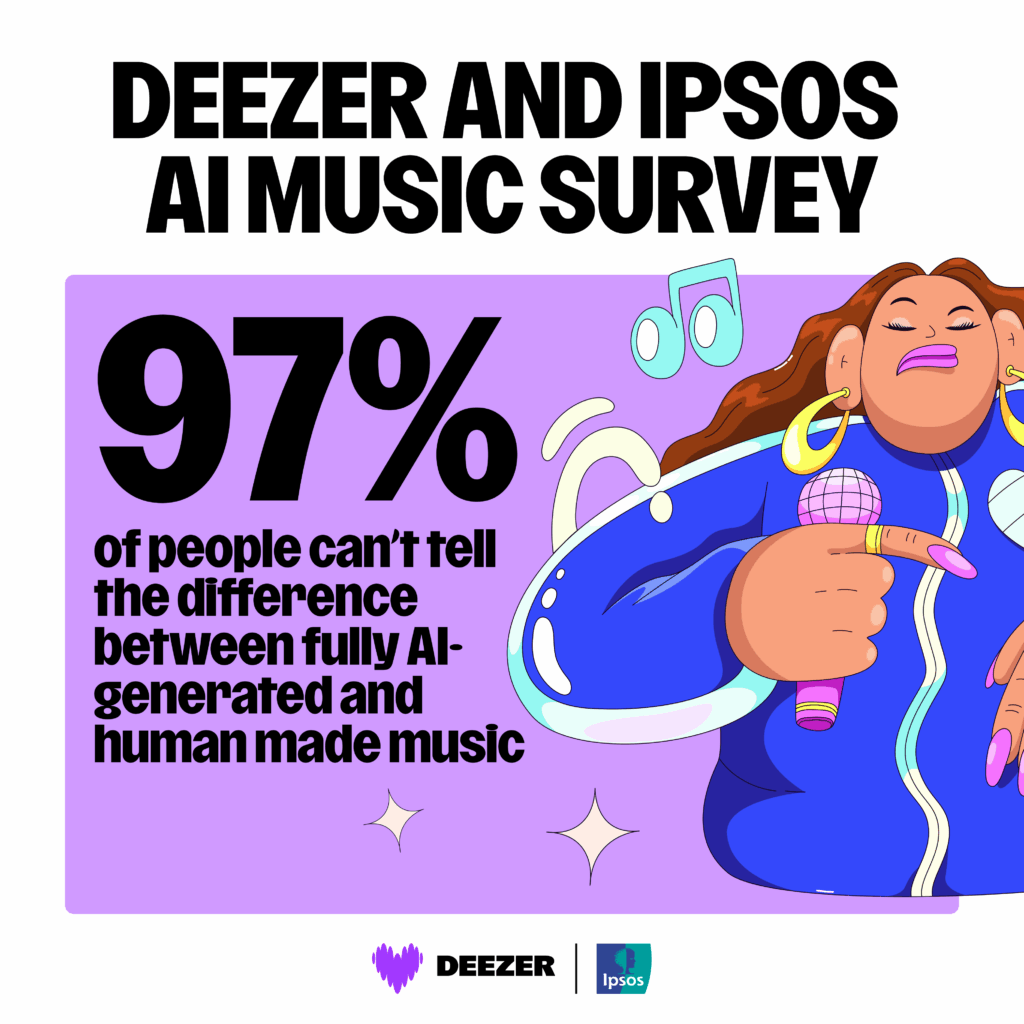

We’re teaching primates to use tablets. AI is writing poetry and making music people listen to. Intelligence and creativity are showing up in forms our grandparents couldn’t have imagined.

We’re either going to keep pretending it isn’t happening or start building something that admits the reality: creativity knows no species barrier. And maybe that’s not something to fear.

Maybe it’s something to figure out together.

We’re monkeys learning to use a new camera called AI. We’re not just monkeys, of course. But even if we were, we’d deserve better than nothing for our work.

The conversation starts when the hiding stops.

RESOURCES

Sulawesi Video - Restless Generation (Where Naruto was)

Deezer/Ipsos survey: 97% of people can’t tell the difference between fully AI-generated and human made music – clear desire for transparency and fairness for artists