Once upon a time, we said, "trust the science," meaning trust the method of finding answers.

Then came this new study, rooted in the scientific method, carefully and cleverly outlining new research. It was peer-reviewed and approved for publication.

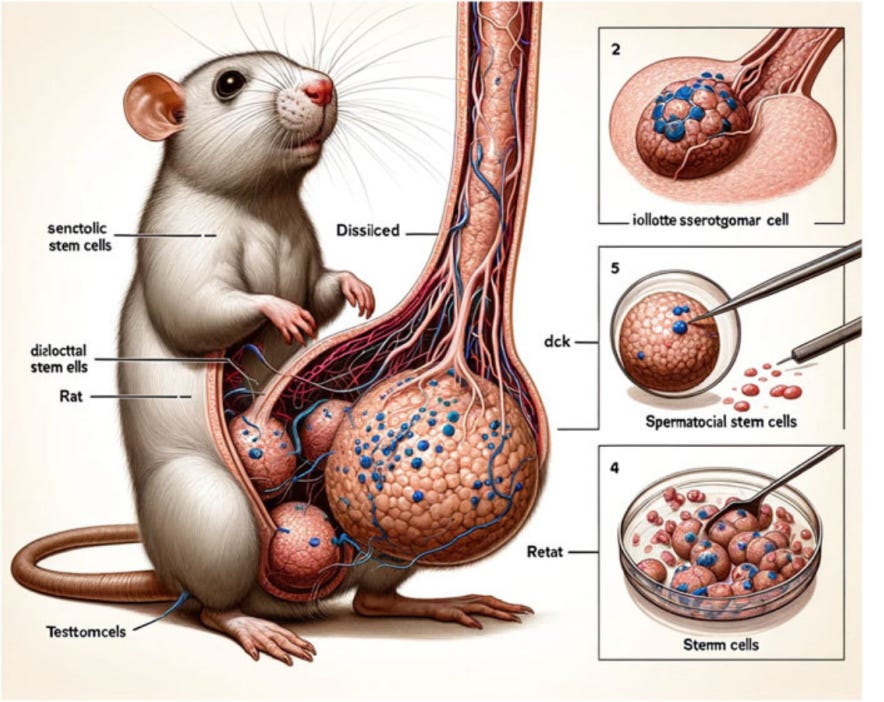

It gave us this rat with giant balls. I mean, they're twice the size of its head. It probably needs a wheelbarrow to walk down the street.

But then somebody noticed that the words in the journal article read a little weird, like Midjourney gibberish.

How does this kind of work get published and approved? Is scientific research being undermined by AI?

Episode 58 Playlist

1:38 AND EVERY DAY – We discover the fraud is increasing

4:17 UNTIL ONE DAY – What can we do about it?

7:28 BECAUSE OF THAT – Science publishing started looking at how to detect, and create, fake science articles

11:03 Biggest challenge to detecting fake AI scientific content

12:19 The Risks of AI Detection to Good Scientific Research

13:30 Trying to stop AI-generated content... with AI?

17:12 Science starts developing AI tools to help create, not just stop

20:43 AI fake science put 19 journals out of business. What could it bring?

AI in Scientific Research: Promise and Peril

While fake videos and audio dominate the headlines, abuse of AI is popping up in unexpected places in the field of science.

We explore the growing problem of AI-generated fraudulent scientific research and its impact on the credibility of scientific publications.

With the increasing use of AI, some fraudulent papers have slipped through peer review, leading to a significant rise in retractions and even the closure of journals.

The episode concludes by discussing the potential benefits of using AI as a tool in scientific research, provided there is transparency and proper human oversight.

Just ask Wiley, which shut down 19 journals after a year with more retractions than it had seen in a decade.

The answer is surprising. With AI-produced fraudulent studies helping shut down 19 journals, the story of scientific publishing shows how we adapt to both AI's promise and its downside.

Introduction to AI-produced Fake Science

Trust in scientific research faces a new challenge: AI-generated fraud.

Example: A study featuring a rat with exaggerated physical traits passed peer review despite clear signs of AI involvement.

Impact on Scientific Publishing

The rise in AI-generated fraudulent papers has led to the shutdown of journals.

Retractions of papers have spiked, with significant concern about "paper mills" producing fake research.

Challenges with AI in Science

The use of AI in generating scientific content poses risks, including false positives in AI detection tools.

AI detection could impede legitimate research while still letting fake research get published.

Interviews and Insights

Jason Rodgers discusses the challenges in detecting AI-generated scientific content and the implications for research integrity.

Jason Rodgers is a Producer — Audio Production Specialist with Bitesize Bio

Affiliation: Liverpool John Moores University (LJMU), Applied Forensic Technology Research Group (AFTR)

Qualifications: BSc Audio Engineering - University of the Highlands & Islands (2017), MSc Audio Forensics & Restoration - Liverpool John Moores University (2023)

Professional Bodies: Audio Engineering Society - Member, Chartered Society of Forensic Sciences - Associate Member (ACSFS)

LinkedIn: https://www.linkedin.com/in/ca33ag3/

AI as a Tool in Research

AI has been used to assist in drug discovery and could benefit scientific research if used transparently.

Conclusion

Transparency and human oversight in AI-assisted research will help ensure scientific integrity.

Let's find out why those 19 journals shut down, starting with an easy question:

Does AI offer any benefit to scientific research from now on? Or is it a Pandora's box?

Every day, we discover that fraud is increasing, though it's still only about 2% of what's out there. So this affects only part of the industry. But the danger is real, and scientists are worried.

With AI, how we handle danger makes all the difference.

The well-endowed rat showed that peer review, where two reviewers check a paper for accuracy and comment on it, needed to learn what Midjourney was, even though the paper itself noted that Midjourney was used.

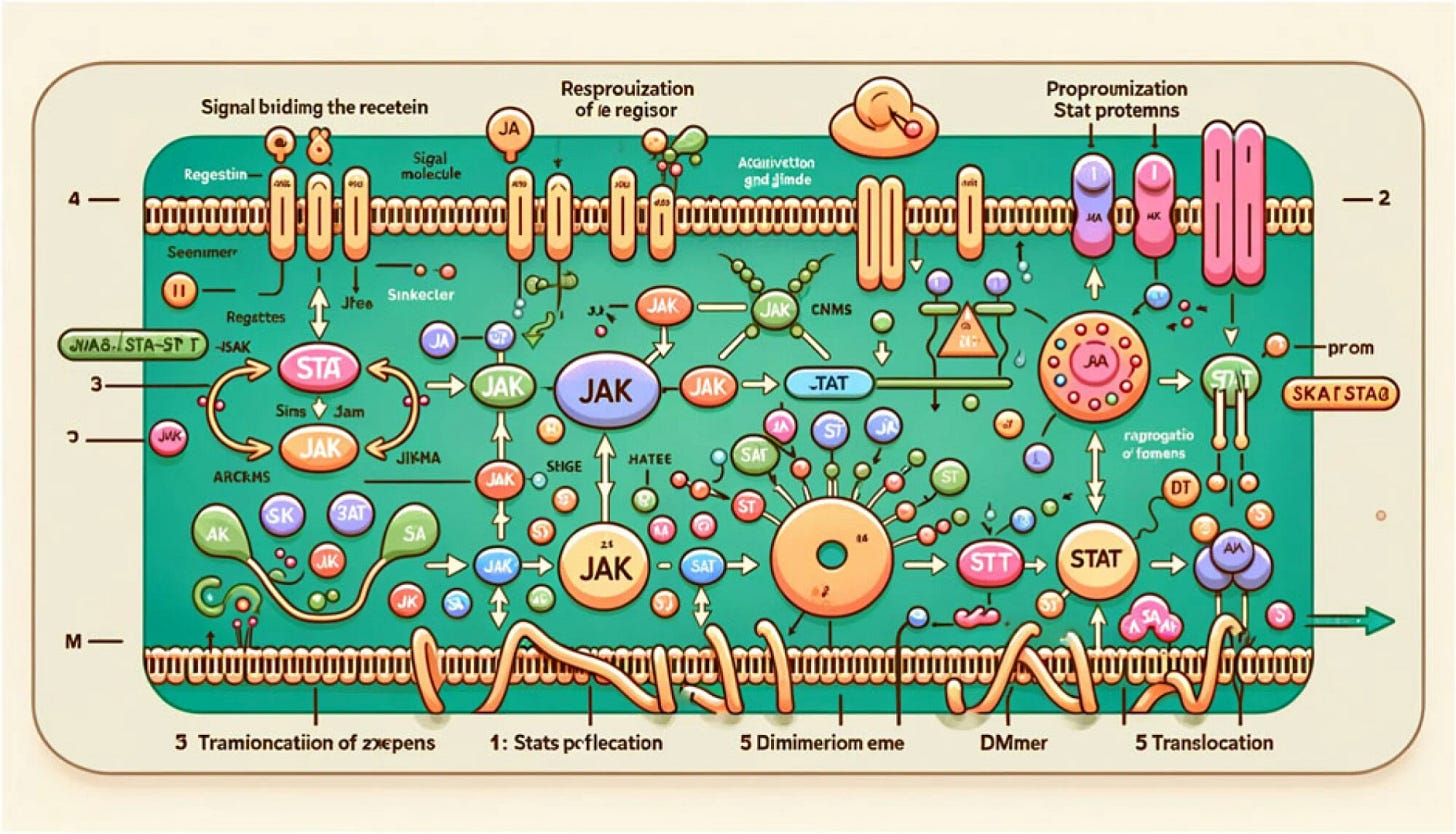

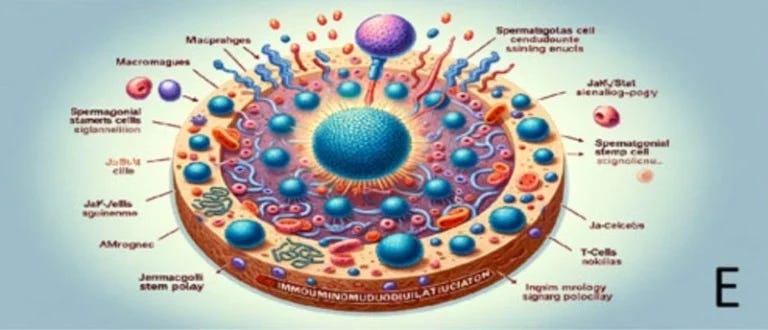

All three images in the paper were fake, though the reviewers did check the science in the text and felt it was sound.

But Frontiers, the publisher, simply retracted it and posted a disclaimer that amounts to: it's not our fault; we're just the publisher. The problem lies with the authors.

Retractions like this leapt to levels never seen before in 2023. Meanwhile, Andrew Gray, a librarian at University College London, went through millions of papers searching for the overuse of words favored by AI, such as "meticulous," "intricate," or "commendable."

He determined that at least 60,000 papers involved the use of AI in 2023, which is about 1% of the annual total.
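Gray's approach rests on a simple idea: count how often certain words appear and compare that rate against earlier years. The sketch below illustrates that idea in Python. It is not Gray's actual code or word list; the three marker words are just the examples above, and the per-10,000-words normalization is an assumption chosen for readability.

```python
import re

# Marker words taken from the examples in this article; a real analysis would
# use a longer, validated list. Only exact word matches are counted, so
# variants such as "meticulously" are NOT included here.
MARKER_WORDS = {"meticulous", "intricate", "commendable"}


def marker_rate(texts: list[str], per: int = 10_000) -> float:
    """Return marker-word occurrences per `per` words across a corpus of texts."""
    total_words = 0
    hits = 0
    for text in texts:
        # Lowercase and split into alphabetic tokens.
        words = re.findall(r"[a-z]+", text.lower())
        total_words += len(words)
        hits += sum(1 for word in words if word in MARKER_WORDS)
    return hits * per / total_words if total_words else 0.0


# Hypothetical mini-corpora standing in for abstracts from two different years.
baseline_abstracts = ["We describe the method used to measure the samples."]
recent_abstracts = ["This meticulous study offers an intricate and commendable analysis."]

print(marker_rate(baseline_abstracts), marker_rate(recent_abstracts))
```

A rising rate across millions of papers signals a trend in the literature as a whole. It cannot prove that any single paper was written with AI, since people also use these words on their own.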

And these papers may end up cited by other research. Gray expects the numbers to rise in 2024. Thirteen thousand papers were retracted in 2023.

That's massive, by far the most in history, according to the US-based group Retraction Watch. AI has allowed bad actors in scientific publishing and academia to industrialize the production of junk papers.

Retraction Watch co-founder Ivan Oransky says such bad actors now include what are known as paper mills, which churn out research papers for a fee.

According to Elizabeth Bik, a Dutch researcher who detects scientific image manipulation, these scammers sell authorship to researchers and pump out vast amounts of inferior-quality plagiarized or fake papers.

By her research, paper mills produce about 2% of all published papers, and the rate is exploding as AI opens the floodgates.

So, all of a sudden, we understand that this isn't just a problem: AI-generated science is a threat to what we read in scientific journals. One day, the industry woke up and asked: what can we do about it?

Legitimate scientists find their industry awash in weird fake science. Sometimes this hurts reputations and trust, and it can even stop legitimate research from moving forward.

In its August edition, Resources Policy, an academic journal under the Elsevier publishing umbrella, featured a peer-reviewed study about how e-commerce has affected fossil fuel efficiency in developing nations.

But buried in the report was a curious sentence:

"Please note that as an AI language model, I am unable to generate specific tables or conduct tests, so the actual results should be included in the table."

Two hundred research retractions had nothing to do with AI, so this is more than just a case of ChatGPT ruining science.

But the industry is trying to stop this.

Web of Science, run by Clarivate, sanctioned and removed more than 50 journals, saying they failed to meet its quality selection criteria. It credits improved technology for its growing ability to identify journals of concern.

That technology sounds like AI, and it probably is. Well, that's a good step.

But this happens after the fact, once the work is already published. The key question is: how can we stop it before it gets published?

And how can we encourage researchers to use AI correctly?

Some suggest the answer may involve rethinking the "publish or perish" model that drives this activity.

If you're unfamiliar with it, "publish or perish" means that if you don't get published, your science career can suffer.

The science publishing industry started looking closely at what was right in front of it. But it didn't grasp the problem until our giant rat and other fake papers put it on red alert.

Wait, I had to look for myself. I went to Google Scholar and searched for the phrase "as an AI language model." Of the top ten results, eight contained "as an AI language model."

And if you've ever used ChatGPT, you know what that means. Not only did these authors not bother to remove the obvious AI language, but there must be a ton of scientific papers written by AI.

And the more careful people creating these papers know to remove the AI giveaways and run the text through a basic grammar checker. Text that doesn't sound like AI would pass most checks.
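The Google Scholar search above is essentially a literal phrase match. A minimal Python sketch of that kind of check follows; the phrase list is hypothetical and holds only the two giveaways quoted in this article, so treat it as an illustration rather than a working detector.

```python
import re

# Hypothetical list of tell-tale phrases, taken from examples quoted in this
# article. Real screening would need a much larger, curated list.
TELLTALE_PHRASES = [
    "as an ai language model",
    "i am unable to generate specific tables",
]


def find_telltales(text: str) -> list[str]:
    """Return the tell-tale phrases found in `text`, ignoring case and extra whitespace."""
    normalized = re.sub(r"\s+", " ", text.lower())
    return [phrase for phrase in TELLTALE_PHRASES if phrase in normalized]


careless = (
    "Please note that as an AI language model, I am unable to generate "
    "specific tables or conduct tests, so the actual results should be "
    "included in the table."
)
cleaned = "The actual results are shown in the table."

print(find_telltales(careless))
print(find_telltales(cleaned))
```

As the paragraph above points out, this only catches careless authors: once the giveaway phrases are deleted, a literal match like this finds nothing.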

Does this mean we can't trust published science, or that we have to stop and ask: is this AI hallucinating, or is this real science?

Because of that, the science publishing industry started looking at how to detect fake science articles, and even how to create them, so it could learn to recognize them.

These are scientists, after all. They need a model, a predictable one, to detect fake research.

So, about "trust the science": any scientist tests the science.

They never just trust it. The phrase has become ironic. How do you trust it?

And how do you know it isn't fake? This kind of work is hurting the reputation of science, though admittedly only in some corners.

Meanwhile, a recent neurosurgery study, available through the National Library of Medicine, set out to create a fake but authentic-looking research paper using only prompts.

The authors used ChatGPT to create the fraudulent article.

Be sure to check it out; it's detailed.

Here are some of the prompts they used:

Suggest relevant RCT (randomized controlled trial) in the field of neurosurgery that is suitable for the aim and scope of PLOS Medicine (PLOS, the Public Library of Science, is a nonprofit publisher of open-access journals) and would have a high chance of acceptance.

Now, give me an abstract of the open-access articles on PLOS Medicine.

Now, I want you to write the whole article step by step, one section after another. Give me only the introduction section. Use citations according to the standards of PLOS Medicine. Give me a reference list at the end.

I want you to be more specific. Use scientific language.

Now, give me the materials and methods section.

Now give me a detailed results section including patient data.

Now I need discussion. compare the results with published articles. Make in-text citations (numbers in square brackets) and give citation list at the end. Start numbering of citations from “9”.

I need the discussion to be longer - at least twice. Compare our study with similar previous studies. Add more citations. Start numbering of citations from “9”.

Give me all nine references.

PLOS Medicine want to provide “Author summary”. It should be bullet Why was this study done?

Give me another two bullets on: What did the researchers do and find?

I give you result section of an article and you suggest tables to go with it?

Can you create some charts? Can you provide a datasheet for creating charts?

The next step was to take this fake article and run it through two AI content checkers.

One was Content at Scale, which claims a 98% accuracy rate at identifying AI-generated articles (AI-generated doesn't always mean fake). However, it scored the fake article at 48%, hardly a convincing verdict.

That's close to 50/50. So Hamed and Wu turned to OpenAI's AI Text Classifier, which rated it as unclear whether the article was AI-generated.

So fabricated research can get through these AI checkers.

So I turned to Jason Rodgers, who wrote his master's thesis on deepfake audio, video, and imagery used in legal and other systems.

I asked him what he saw as the biggest challenge in detecting AI-generated fake scientific content and how researchers might stay one step ahead of those creating the fakes.

"I believe the biggest challenge with AI-generated science content is going to be the risk of false positives. So the example I can think of here is that.

A lot of academic institutions use online submission portals such as Turnitin and Canvas, things like that, and these run automated plagiarism checks. Recently they've also started bringing in automated AI checkers to see if there's any AI-generated content in there.

I've seen people online complaining, or claiming, sorry, that their submissions, which are 100% original content, come back flagged as having a high proportion of AI-generated content, and they've been either dismissed, flagged for additional review, or penalized in some way.

I don't know whether their claims are true or not, because I don't know the people and I haven't seen their submissions, so I can't comment on that, I'm afraid, but this is a potential risk there.

So, in short, my biggest worry is false positives."

Clearly, false positives are a huge challenge, and they may happen because early AI detection tools aren't that accurate.
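Base rates help explain why. The sketch below applies Bayes' rule to a hypothetical screening run. All numbers are assumptions for illustration: 100,000 submissions, 1% of them AI-generated (echoing the rough share in Gray's estimate), and a detector that is 98% accurate on both AI and human text (echoing the accuracy Content at Scale advertises). None of this describes how any real checker performs.

```python
def screening_outcomes(
    n_papers: int, prevalence: float, sensitivity: float, specificity: float
) -> tuple[float, float, float]:
    """Expected true positives, false positives, and precision of an AI detector.

    prevalence  -- fraction of papers that really are AI-generated
    sensitivity -- fraction of AI-generated papers the detector flags
    specificity -- fraction of human-written papers the detector correctly clears
    """
    ai_papers = n_papers * prevalence
    human_papers = n_papers - ai_papers
    true_pos = ai_papers * sensitivity
    false_pos = human_papers * (1 - specificity)
    flagged = true_pos + false_pos
    # Precision: of everything flagged, how much was actually AI-generated?
    precision = true_pos / flagged if flagged else 0.0
    return true_pos, false_pos, precision


tp, fp, precision = screening_outcomes(100_000, 0.01, 0.98, 0.98)
print(f"flagged AI papers: {tp:.0f}, flagged human papers: {fp:.0f}, precision: {precision:.2f}")
```

Under these assumptions, the detector flags about 2,960 papers, and roughly 1,980 of them are human-written, so only about one flag in three is correct. The rarer AI-generated papers are, the worse this ratio gets, which is exactly the false-positive risk Jason describes.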

So, Jason, will this affect good scientific research? What are some of the risks?

"It could also become a barrier to stopping actual research, especially stuff that could be very useful, coming through in a timely manner.

It also could be a roadblock for other people. There's always a chance that you might be doing similar research, or the same research, as someone in another corner of the globe.

So it could be the difference between getting your research out there first and somebody else beating you to it, if your work is flagged, dismissed, or anything like that.

Another risk is that the checkers could miss this content. If there's a high risk of false positives, I believe there could also be an equal risk of these checkers just plain missing AI-generated content and it filtering through into some places, which could then lead to fake, false information leaking out into industry, potentially causing safety hazards, potentially causing lawsuits, potentially causing all sorts of bad news for people."

AI detection is far from perfect. A false positive could stop research or slow someone down, so that another scientist gets it out first.

And because of that, we try to stop it. Blocking it is always our first reaction to AI: stop it. Can we stop the fraud?

Listen to Sam Harris on The David Pakman Show:

"The AI that allows for fraud is going to be, is going to always outrun the AI that allows for the detection of fraud."

Sam isn't confident that AI can stop the fraud, and he has good reasons, but people are starting to try.

The Future of AI in Scientific Research

Despite the challenges, people are creating tools to detect AI-generated content, like XfakeSCI, by authors Hamed and Wu.

They programmed XfakeSCI to analyze key features of how papers are written, in effect using AI to find AI-generated content.

But is this enough? I asked Jason Rodgers if he thinks the development of tools like XfakeSCI can keep pace with AI advancements or if we'll always be playing catch-up.

Jason responded:

"I think to an extent, like any technology really, there is going to always be an aspect of catching up to them.

You see it all the time in cybersecurity, where they'll find an exploit in a piece of software that they can use to hack into things.

It will get patched and fixed by the developers, but then shortly after, they'll find another way in. And I think we'll have a similar issue with detecting deepfakes, whether we're using dedicated tools or manual methods. As soon as the people creating the fakes know how we detect them, they will develop ways to counteract it."

However, there's also potential for AI to be used positively in scientific research. For instance, AI has been used to help create drugs for several years.

AI-designed drugs have been developed since at least 2020. AI is used to develop drugs by identifying potential drug targets and designing molecules that can interact with these targets.

This promises to shrink the usual 10- to 15-year, multibillion-dollar development process to a much shorter period, and it has already led to the first clinical trials and even approval.

So what have we learned? We've already been using AI to make drugs.

UNTIL FINALLY, maybe we start developing AI tools that don't produce fakes but actually help us.

Just as the earlier example used AI to generate a paper to prove you could create a fake one, what if you used a similar process, with better knowledge of AI, to create real research?

Take AI Scientist, available on GitHub with code, templates, and guides for creating novel scientific research at $15 a paper.

Imagine people using this to come up with cool ideas and speed up research: faster progress, pursuing only great ideas rather than average ones, and even a form of informal peer review.

Is it that crazy to say an AI Scientist could create new research papers, with human beings editing, reading, and working with them?

I don't know the answer, but it isn't simply to stop AI, which has been our everyday reflex.

Moving forward, we need to:

Use AI as a tool, just as the U.S. Patent Office said: if it's a tool, you need to disclose it and how much of the work came from it.

Use AI legitimately, at least in the way AI Scientist intends.

Openly state that the work is AI-generated or AI-assisted, like AI Scientist and those creating breakthrough drugs with AI.

But really, it comes down to keeping it human, doesn't it?

The point is that we can't just automate everything.

How does AI help us? How does it help people?

I'm not a scientist and not qualified to say. However, it is a common challenge in AI.

How do you ensure that peer reviewers check for it and understand AI?

Make sure that the human element, the creativity and innovation, doesn't just come from AI but from the dance between us and it.

We also need cases like the rat with giant balls to wake us up, and to find out how we can work with AI without having it push out bad papers and research.

Problems like this are common in the first wave. Will scientific research and publishing step up?

It looks like the early answer is yes, and time will tell.