The TikTok Blueprint: When Silicon Valley Startup Advice Becomes Government Policy

Taking advantage of AI before it takes advantage of you – especially when governments are writing the playbook.

First, they came for our content. Now they're rewriting the law books.

"Make me a copy of TikTok. Steal all the users. Steal all the music. Put my preferences in it."

Then, if it takes off, "hire a bunch of lawyers to go clean the mess up."

That Silicon Valley playbook – steal first, settle later – is startup wisdom becoming government policy.

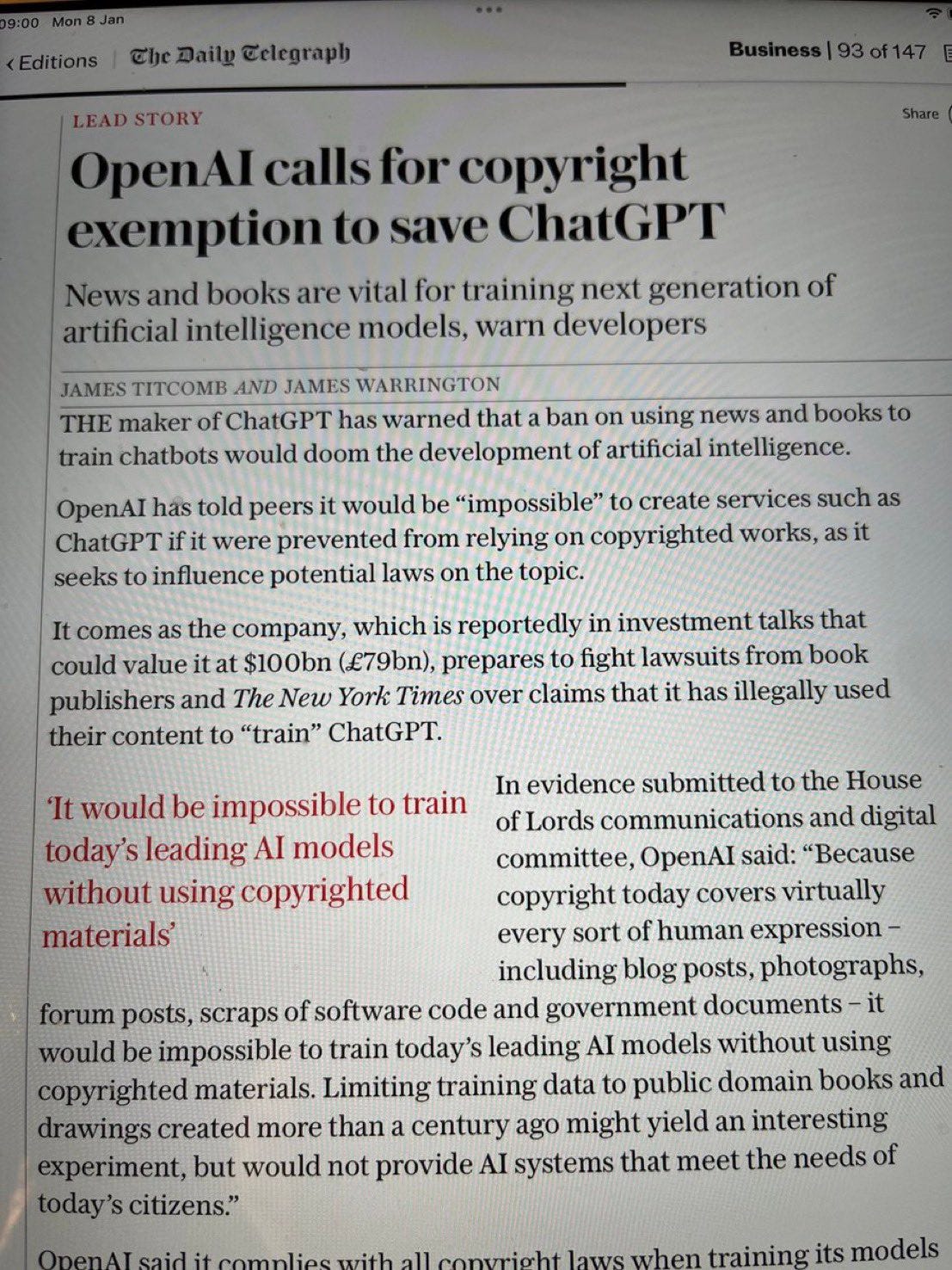

And it's playing out in real time across UK and US copyright battles, where AI companies and political power are deeply aligned against creators.

Almost like AI is too big to fail.

Sacrificing property rights (because that's what copyright is – a property right) on the altar of tech supremacy.

UK: The AI Training Opt-Out Lottery

The UK government's proposal is simple: AI companies can scrape whatever they want. No permission is needed. Creators must actively opt out. Good luck with that.

As Baroness Kidron, an independent filmmaker who is presenting the case to UK politicians, puts it:

"The plan is a charter for theft, since creatives would have no idea who is taking what, when and from whom."

The policy architect? Matt Clifford – a tech investor with conflicts "so deep" they could be a TV drama.

His solution to prevent the UK from "falling behind"? Gut copyright law.

Meanwhile, big creators with lawyers are cutting deals left and right. Small creators get the opt-out lottery.

When challenged on fairness, Tech Secretary Peter Kyle defended removing transparency requirements because

"it would not be fair to one sector to privilege another."

Baroness Kidron's response cuts deep:

"It is extraordinary that the government's decided, immovable, and strongly held position is that enforcing the law to prevent the theft of UK citizens' property is unfair to the sector doing the stealing."

US: Copyright Office Chaos

Plot twist across the pond. The US Copyright Office on May 9 releases a bombshell report siding with creators. Then a day later, the Trump administration fires its author, Shira Perlmutter.

Her replacement? A DOJ attorney with "no expertise in the field."

The turmoil is real. As Graham Lovelace notes in his excellent tracking of this copyright chess match:

"Doubts now also exist over whether the office's fourth report will ever see the light of day."

The Pattern Emerges

AI companies plead poverty while sitting on tens of billions in funding, aiming for trillion-dollar valuations.

They've bought five years of free data with fair use arguments.

What other industry openly takes what it wants, settles only with players who can afford lawyers, and claims it can't pay for the raw materials that built their entire business model?

Both sides of the Atlantic are choosing AI supremacy over creator rights. The Stanford "hire lawyers later" strategy has become official policy.

This isn't just about big tech versus artists. This is about who gets to participate in the AI economy.

When governments write the rules for free data extraction, only the biggest players win.

Small AI companies? They'll still need to pay. Individual creators? They get nothing. The middle gets squeezed while the top and bottom play by different rules.

When governments write the rules for digital content extraction, who's really getting optimized here?

The blueprint is clear. The question is whether we'll recognize it before it's too late to rewrite the rules.

Part 2: The UK AI Opt-Out Impossibility

Here's where it gets absurd.

The UK government's proposal? AI companies scrape everything. No permission. No cost. Creators just need to "actively opt out."

Now if I write a book, I'd have to contact ChatGPT: "Opt me out." Then Claude: "Opt me out." Then DeepSeek: "Opt me out."

You get the picture. It's the automated opt-in problem that's plagued the internet since day one.

Except now governments are telling creators:

"You handle it. What's the problem? You're in control."

48,000 People Disagree

This week in the UK, the pushback exploded. Artists previously filed a 48,000-signature petition.

Parliament votes went in favor of transparency requirements. This might force AI companies to disclose what they're using and give creators real opt-out control.

Though the general feeling is that won’t happen. Why?

Tech Secretary Peter Kyle's defense?

"It would not be fair to one sector to privilege another."

Enforcing existing property law is now "privileging" creators over the companies taking their work?

Baroness Kidron nails it:

"It is extraordinary that the government's decided, immovable and strongly held position is that enforcing the law to prevent the theft of UK citizens' property is unfair to the sector doing the stealing."

The AI Patterns

Both sides of the Atlantic show how closely aligned governments and AI companies have become. In DC, it's not even subtle anymore.

Where do creators play in this game? Will the US report survive?

Will we stand up like 48,000 in the UK?

If you're a creator, this isn't theoretical anymore. You don't have years to figure this out.

The rules are being written right now, with or without you.

Part 3: The Solution Exists – But Only if Companies Want to Pay

The pushback to forcing creators to opt out individually? There's already a solution.

Meet Credtent CEO Eric Burgess, who's built exactly what the market needs – if AI companies want to play fair.

The AI model has been: data is free.

"It's not. And it's a total Silicon Valley thing.

Eric Schmidt outlined it last August at Stanford: steal, bring in the lawyers."

If governments force AI companies to pay for content, does that kill startups and only help the big players?

Credtent's Tiered Approach

Burgess has a different take:

Startups get revenue sharing – "We can set them up with a revenue share opportunity, they can license early"

Vertical companies pay by category – Only license what they need for specialized solutions

Enterprise pays premium rates – Full access, full price

Prices drop as scale grows – More creators joining = lower costs for everyone
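The tiers above could be sketched as a small pricing function. This is a hypothetical illustration only: the tier names, every rate, the discount curve, and the `quote` function are assumptions made up for the example, not Credtent's actual pricing or API.

```python
from dataclasses import dataclass

# All numbers below are made-up placeholders for illustration only.
REVENUE_SHARE_RATE = 0.05          # hypothetical share of startup revenue
BASE_PRICE_PER_CATEGORY = 1000.0   # hypothetical flat price per content category
ENTERPRISE_MULTIPLIER = 3.0        # hypothetical premium for full access


@dataclass
class Licensee:
    tier: str                   # "startup", "vertical", or "enterprise"
    annual_revenue: float = 0.0
    categories: int = 0         # number of content categories licensed


def scale_discount(creators_enrolled: int) -> float:
    """Price factor that falls as more creators join (never below 0.5)."""
    return max(0.5, 1.0 - creators_enrolled / 200_000)


def quote(licensee: Licensee, total_categories: int, creators_enrolled: int) -> float:
    """Return a hypothetical annual license fee for one licensee."""
    discount = scale_discount(creators_enrolled)
    if licensee.tier == "startup":
        # Startups pay a share of revenue instead of an upfront fee.
        return licensee.annual_revenue * REVENUE_SHARE_RATE
    if licensee.tier == "vertical":
        # Vertical companies pay only for the categories they need.
        return licensee.categories * BASE_PRICE_PER_CATEGORY * discount
    if licensee.tier == "enterprise":
        # Enterprise licenses every category at a premium rate.
        return (total_categories * BASE_PRICE_PER_CATEGORY
                * ENTERPRISE_MULTIPLIER * discount)
    raise ValueError(f"unknown tier: {licensee.tier}")
```

The point of the sketch is the shape, not the numbers: a startup with no revenue pays nothing up front, a vertical company's bill tracks what it uses, and the per-category price falls for everyone as enrollment grows.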

The Compliance Reality

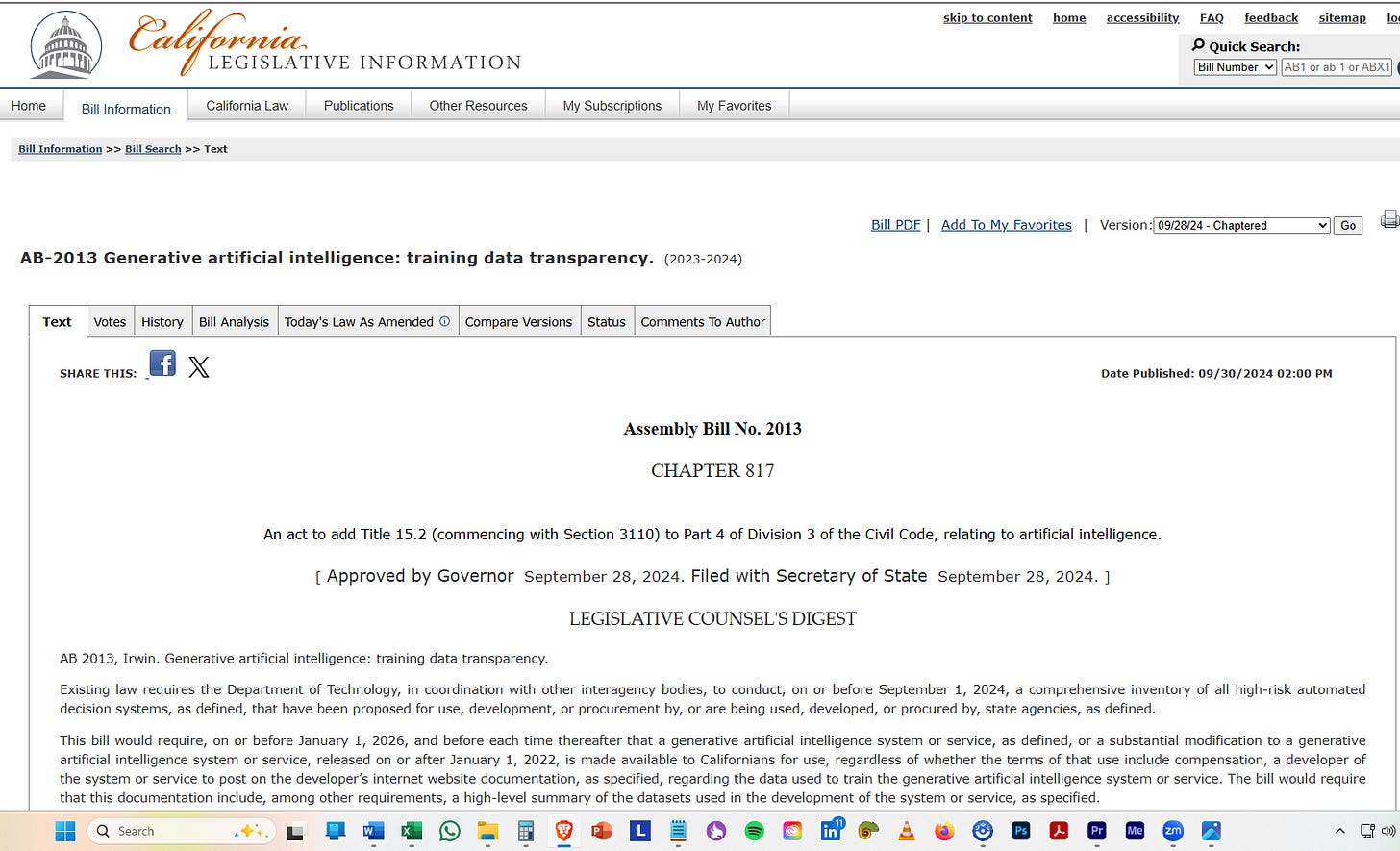

AB 2013 passed here in California.

Starting January 1st, 2026, AI companies are going to be required to disclose what content they've used for training. The EU has similar requirements.

Some AI companies claim they can't disclose training data because it's a "trade secret."

Credtent solves this: companies upload their training corpus, and the platform identifies what's licensable, public domain, or problematic.
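As a rough picture of that triage step, here is a minimal sketch that sorts corpus items into the three buckets named above. The `REGISTRY` lookup table, the work identifiers, and the `triage_corpus` function are all hypothetical; a real platform would need actual content matching rather than exact ID lookup, and nothing here reflects how Credtent implements it.

```python
from collections import defaultdict

# Hypothetical registry mapping a work's identifier to its rights status.
# A real system would match on content, not on a tidy ID like this.
REGISTRY = {
    "work-001": "licensable",
    "work-002": "public_domain",
}


def triage_corpus(work_ids, registry=REGISTRY):
    """Group corpus items into licensable, public_domain, or problematic."""
    report = defaultdict(list)
    for work_id in work_ids:
        # Anything the registry does not recognise is flagged for review.
        status = registry.get(work_id, "problematic")
        report[status].append(work_id)
    return dict(report)
```

Because only the resulting report needs to be shared, a design like this would let a company show which categories its data falls into without publishing the corpus itself.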

AI Copyright Guardrails That Work

"We know guardrails are possible. If you say,

'I want to create a story like Stephen King,'

they're afraid of Stephen King because he has the means of hiring lawyers."

Burgess envisions a future where creators choose to get paid for AI using their style, or block it entirely.

Like James Earl Jones licensing his voice for future Darth Vader content before he died – "his family is now getting paid for AI use of his voice."

"You're taking value and extracting the value from that work and creating market replacements potentially."

Even bad AI-generated content hurts creators:

"All it has to do is make something similar that they can pay a little bit of money to have go higher up on the list of what's found on Amazon.

And guess what? They just hurt your book."

Any excuse that they had for saying it's going to be too hard to do licensing?

Credtent, and others, already solve that problem for you.

The infrastructure exists. The question is whether governments will force AI companies to use it – or keep writing laws that let them take whatever they want.

Part 4: The AI That Thinks Like You Do (Without Stealing Your Thoughts)

While governments debate letting AI companies raid everyone's content, Dr. Stephen Thaler built something completely different.

His DABUS system doesn't need your data because it thinks more like you think.

"When we talk to these chatbots online, we're looking at the cumulative opinion of many contractors," Thaler explains.

"It's not really sentience. It's not really conscious.

Nor is it really thinking for itself."

Current AI? It's human opinions filtered through human opinions.

No real emotion. No actual thought. Sophisticated pattern matching.

Thaler built two neural networks that literally argue with each other:

The Generator creates a stream of potential ideas

The Critic watches and gets frustrated when solutions don't emerge

When frustrated, the critic injects more "disturbances" into the generator
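The loop described above can be illustrated with a toy sketch. To be clear about what this is: it is not Thaler's DABUS code, and it swaps the two neural networks for two plain functions. The target value, the noise levels, and the "patience" threshold are all assumptions chosen for the example. It only shows the general idea of a critic that raises the noise fed to a generator when progress stalls.

```python
import random


def generate(current, noise, rng):
    """Generator: perturb the current idea with random noise."""
    return current + rng.gauss(0.0, noise)


def critic_score(idea, target):
    """Critic: higher is better; here, closeness to a target value."""
    return -abs(idea - target)


def search(target, steps=500, seed=0):
    rng = random.Random(seed)
    best, noise, patience = 0.0, 0.1, 0
    best_score = critic_score(best, target)
    for _ in range(steps):
        candidate = generate(best, noise, rng)
        score = critic_score(candidate, target)
        if score > best_score:
            # Progress: keep the idea and calm the noise back down.
            best, best_score, noise, patience = candidate, score, 0.1, 0
        else:
            patience += 1
            if patience >= 10:
                # "Frustration": no recent progress, so inject more disturbance.
                noise = min(noise * 2.0, 10.0)
                patience = 0
    return best
```

Calling `search(5.0)` starts from an "idea" of 0.0 and returns the closest value it found to the target. The interesting part is the `else` branch: the generator is never told where to go, it is only shaken harder when the critic runs out of patience.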

"That looks like consciousness," Thaler realized.

"You have a stream of consciousness, a stream of ideas coming apparently from out of nowhere. And you also have a critic getting frustrated."

The Neurotransmitter Breakthrough

This mirrors how your brain works. Thaler discovered his system was replicating "global release" – when your brain floods itself with neurotransmitters during emotional states.

"There's nothing magical about a neurotransmitter.

It's a molecule that can either increase or decrease neural activity randomly, stochastically. And that's your noise."

That "noise"? It's emotion. It's the voice in your head that says "do something" before you consciously decide to act.

DABUS doesn't steal content to learn creativity. It generates emotion, gets frustrated, has eureka moments, and creates original ideas.

Because it's built like a brain, not a database.

While everyone fights over who owns training data, Thaler built AI that doesn't need it. The future of AI might not be about who can scrape the most content, but who can build the most human-like consciousness.

That voice in your head saying "wait, what if..." before you have a breakthrough?

DABUS has that too. And it didn't need to steal anyone's work to get it.

The AI Copyright Crossroads – Where Creators Make Their Stand

We're at a crossroads in AI. On one side: big tech and governments. On the other: everyone else.

This isn't just US and UK drama. Meta, Google – these international companies are working country by country to ensure "AI works for them." Makes sense from their perspective.

But here's their line:

"We can't pay for content. We need too much. It would ruin AI."

Remember that UK politician?

"It's a privilege. You're asking for a privilege."

When creators want compensation for work that was clearly taken without permission.

The Poverty Bluff

AI companies plead poverty while sitting on tens of billions in funding, aiming for trillion-dollar valuations. This fair use bluff has bought them five years of free data since 2020.

The mask slipped in a Meta lawsuit. A director of engineering admitted: If we license one book, then we have to license them all and fair use is out the window.

The Two-Tier Reality

Disney gets licensing deals. Getty gets deals. Anyone with lawyers gets paid.

Everyone else? You get the opt-out lottery.

That is why Baroness Kidron is fighting this. That's why Shira Perlmutter is fighting for her job.

When the replacement doesn't even understand copyright, like this Department of Justice attorney with no domain experience, creators lose their voice.

The US Copyright Doc That Changes Everything (If they use it)

There's a US Copyright Office report sitting there that could literally change the AI industry because it leans towards compensating creators.

And nobody's listening. Nobody's talking.

What other industry openly takes what it wants, settles only with players who can afford lawyers, and claims it can't pay for the raw materials that built their entire business model?

The infrastructure exists. Credtent proved licensing can work at scale. Dr. Thaler showed AI doesn't need stolen content to be creative.

The legal frameworks are there.

But if creators don't stand up now – while these rules are being written – the window closes. The big players cut their deals. Everyone else gets crumbs.

The TikTok blueprint worked for startups. Now it's looking like a government policy.

The question isn't whether this system benefits AI companies – it obviously does.

The question is: do we want an AI future where only the biggest players get to participate?

Your move, creators. The blueprint's being written with or without you.

Taking advantage of AI before it takes advantage of you means understanding the game being played. These aren't accidents – they're strategies. What's your strategy?

LINKS FROM PODCAST

Victory again for tireless AI transparency campaigner. Will the government now act?

Trump wins first round in copyright chief's job battle

FAQ: Is AI training data a trade secret?

Copyright and Artificial Intelligence, Part 3: Generative AI Training Pre-Publication Version

Five Takeaways from the Copyright Office’s Controversial New AI Report

------------------

Stephen Thaler is at:

------------------

California Delete Act

AB-2013 Generative artificial intelligence: training data transparency.