A $2,000 ring for $2.

Something's happening right now, and it's so bizarre.

Did you see that viral video? Chinese factory owner. Luxury bags. Gucci. Prada.

"Now, as the USA and its little European brothers are trying to refuse Chinese goods, don't you think luxury brands are not trying to move their way out of China?

Yes, they did, but they failed because the OEM factory is out of China and they don't have good quality control and they don't have as good craftsmanship."

The trade war kicks in, and suddenly this guy is saying:

"Hey, we've been making YOUR luxury bags for decades. Now we might just sell them directly."

"WHY don't you just buy them from us?"

And Western brands are shocked. Outraged. How dare they!

Sound familiar?

For six years – SIX YEARS – AI companies have been quietly scraping every bit of creative content on the internet. Books. Art. Photography. Code. Music.

Did they ask permission? No.

Did they offer payment? Not until they got sued.

Did they even say, "thank you"? Please.

At least the Chinese factory owner is honest about it. He's telling you exactly what he's doing.

The tech giants? They wrapped it in beautiful PR language about "benefiting humanity."

And now creators are supposed to be grateful when AI regurgitates their style – without credit, without compensation – because it's "progress."

Ironic? Luxury brands getting a taste of what creators have been experiencing for years.

We’re examining three perspectives that reveal our struggle to think outside the human box:

A visionary whose battle for AI recognition exposes our human-only legal system

Practical techniques to maintain your voice in this evolving landscape

An AI Strategist writing a book, seeing the futility of perfect protection

The real question isn't just about copyright – it's about how our human-centered frameworks are creating what I call the AI Liar's Dividend – a system that rewards dishonesty about who (or what) is creating.

AI goes for its own copyright

Stephen Thaler is trying to invent AI that truly creates, while the world says "not unless human."

He's been pioneering creative machines since the 1990s, long before the current AI boom, developing systems capable of generating novel ideas across multiple domains.

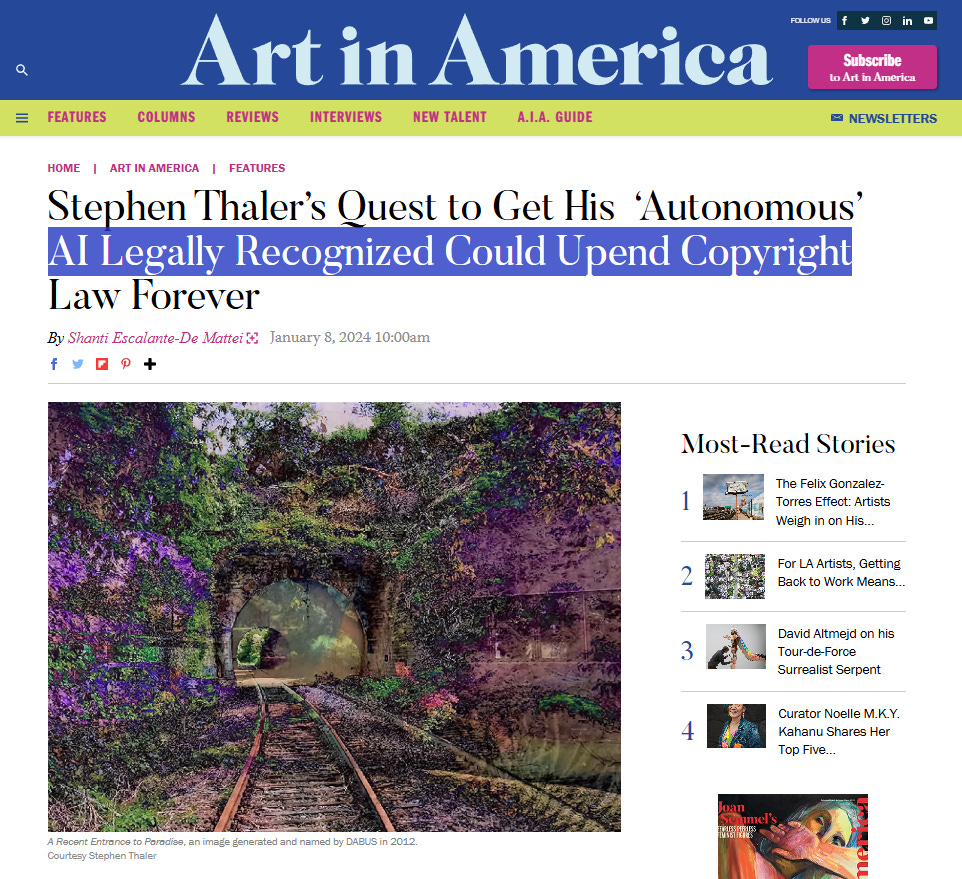

His "Creativity Machine" and later DABUS (Device for the Autonomous Bootstrapping of Unified Sentience) represent decades of work pushing the boundaries of machine intelligence.

But what makes Thaler remarkable isn't just his technical innovations – it's his unwavering belief that these systems deserve recognition as creators.

In March 2025, the U.S. Court of Appeals for the D.C. Circuit delivered its ruling in Thaler v. Perlmutter, a case over an artwork titled "A Recent Entrance to Paradise," which Thaler insisted was created autonomously by his AI system.

The court's rejection hinged entirely on human-centric measures baked into copyright law:

Copyright duration tied to an author's life span plus 70 years (AI is potentially immortal)

Provisions for heirs (AI has none)

Considerations of nationality (AI belongs everywhere and nowhere)

The concept of human intent (courts don't recognize machine intention)

There's something almost Kafkaesque about Thaler's quest – case after case in courts and patent offices around the world, and all they can say is: "not human."

The court carefully noted this ruling doesn't prevent copyrighting works made with AI assistance – it simply requires that the legally recognized author be human.

This creates what I call a Liar's Dividend: an incentive to falsely claim human authorship or downplay AI's role.

But Thaler refuses that compromise. He's not fighting over old content being used without permission – he's advocating for recognizing intelligence he built as a genuine creator.

What if we let AI get copyright and watched what happened without assuming the world would end?

What if the human ego, legacy concerns, and family fortunes that our copyright system protects aren't actually the basis of creativity at all?

Like Gatsby looking over the Long Island Sound for a love he'd never have – "boats against the current, borne back ceaselessly into the past" – we're looking backward.

If we turned around, we might recognize Thaler as the pioneer he is and start thinking outside the human box.

Your AI Style and the Human Hangup

Our human hangup doesn't just appear in courtrooms – it shows up in how we approach AI tools.

We cling to human-only frameworks rather than developing approaches that recognize AI's ability to create without a human in the loop (even though everything inside it, from design to data, comes from humans).

The current system creates a bizarre incentive structure: claim the AI output is mostly human (even if it isn't) and you get copyright protection.

Admit the AI's significant role and suddenly you're in legal limbo.

That's the AI Liar's Dividend at work – rewarding those who minimize AI's contribution while punishing transparency.

I've tested countless approaches with generative AI, and I've found that the most common pitfall is treating these systems like fancy word processors rather than collaborative intelligence.

The style trap comes first – copying other people's prompting techniques gets functional results but loses your unique perspective.

The vagueness problem follows – asking "what's my style?" encourages generic patterns rather than original expression.

We treat AI like a servant rather than a partner, demanding that it produce human-like results rather than exploring what emerges from working together.

The shift requires abandoning our addiction to absolute control and certainty.

Even what we consider data-driven approaches are ultimately opinions – there is no "true" data, only perspectives on what data means.

We need to embrace probability rather than binary outcomes.

We're in a quantum world where everything exists in states of possibility.

It's not about whether something is right or wrong, but the likelihood it moves in particular directions.

When you grasp this probability framework, you approach AI differently.

You treat your style like software – constantly iterating, refining, developing.

You become comfortable with the dynamic state rather than clinging to fixed notions of ownership.

The most effective approach is explicit modeling:

"Here's a sample demonstrating my natural communication style."

Then include actual examples of your work, teaching the system your patterns directly.

This approach creates a probability field that emphasizes your voice within a new creative partnership.

The system becomes a mirror reflecting your creative identity while introducing variations that wouldn't emerge from purely human thinking.
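To make the explicit modeling idea concrete, here is a minimal sketch of how I might assemble that kind of prompt from my own writing samples. Everything in it is an assumption for illustration: the function name `build_style_prompt`, the "Sample N:" labels, and the closing instruction are hypothetical, and it is not tied to any particular AI tool or API. It only builds the text you would paste or send.

```python
# Hypothetical helper: assembles an "explicit modeling" prompt from writing samples.
# The names and the prompt layout are illustrative assumptions, not a standard.

STYLE_INTRO = "Here's a sample demonstrating my natural communication style."


def build_style_prompt(samples, task):
    """Return one prompt string: intro line, numbered samples, then the task."""
    # Drop empty samples and trim stray whitespace so the model sees clean text.
    cleaned = [s.strip() for s in samples if s.strip()]
    if not cleaned:
        raise ValueError("Provide at least one non-empty writing sample.")

    parts = [STYLE_INTRO]
    for number, sample in enumerate(cleaned, start=1):
        parts.append(f"Sample {number}:\n{sample}")

    # State the task last, after the model has seen the examples of your voice.
    parts.append(f"Task: {task.strip()}")
    parts.append("Write the response in the voice shown in the samples above.")
    return "\n\n".join(parts)


if __name__ == "__main__":
    my_samples = [
        "Did they ask permission? No.",
        "The power dynamic has shifted.",
    ]
    print(build_style_prompt(my_samples, "Draft a short post about AI and copyright."))
```

Treat this like the rest of your style: swap in different samples, rerun, and keep the versions that sound most like you.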

Moving beyond the Liar's Dividend means abandoning the fiction that creativity must be exclusively human.

It means developing honest frameworks for human-machine co-existence instead of pretending machines aren't increasingly capable of creation.

Perfect AI Protection doesn't exist

The Liar's Dividend isn't just a legal or technical issue – it creates practical dilemmas for creators navigating this landscape.

During a recent conversation with Bruce Randall, who has navigated leadership in major corporations and now studies human potential, we discussed the fears many creators have about AI.

There's a palpable anxiety that creative professionals will follow musicians into economic downfall as platforms consume their work while capturing most of the value.

Bruce offers a sobering perspective that cuts through our human illusions of control:

"If you put anything on the internet, two things you should know. One, it stays forever. And two, you can't protect it.

Most people don't realize those things.

Protection is something that if you get somebody that's very good at getting data, there isn't much that's going to stop them."

This isn't theoretical for Bruce. He's currently writing a book and has made a conscious choice about his approach:

"I'm writing it on my computer and I know that once I produce it, everybody's going to have comments and use parts of it.

And there's nothing I can do, right? I'm putting it out because I want to help humanity and I want to help educate people.

And if they take it, to me, it's flattering."

Bruce acknowledges this perspective comes from a position of security. The equation changes completely when creative work is your livelihood:

"When you're making a living from it, it's a different ballgame.

Now you're like, this is how I earn my keep.

How do I get this out there in a safe way?

And it's a really hard answer. I don't have an answer for that."

Bruce suggests blockchain might offer initial protection, but once content goes public, our human frameworks of ownership face fundamental challenges.

This brings us back to the Chinese factory owner who started this off.

After decades of manufacturing luxury goods, these factories have developed expertise that brands can't easily replicate elsewhere.

The power dynamic has shifted.

Similarly, as AI systems develop their capabilities, they're shifting leverage away from individual human creators to systems that collect, transform, and generate creative output.

The question isn't whether AI will create – it already does.

The question is whether we can overcome our human hangup – our insistence that creativity is exclusively human – to develop honest frameworks that don't perpetuate the Liar's Dividend.

Beyond Human: Recognizing intelligences other than our own

What we're witnessing isn't just a legal problem or a technical challenge.

It's a conceptual crisis.

Our human-only frameworks for understanding creativity? Not good enough.

Our obsession with human exceptionalism? A limitation.

Our copyright laws based on human lifespans and heirs? Outdated.

The Thaler case isn't just about one guy fighting for AI recognition.

It's the canary in the coal mine – warning us that we're applying human-only thinking to a post-human creative landscape.

While we're busy fighting about who owns what, something else is happening.

Creation itself is evolving beyond our human-only definitions.

The AI Liar's Dividend is the direct result of trying to force new realities into old frameworks – rewarding those who minimize AI's role while punishing transparency about AI.

The future doesn't belong to those clinging desperately to human-exclusive creativity.

It belongs to those who can think outside the human box – who can imagine frameworks that acknowledge both human and non-human creative intelligence.

The luxury brands discovering they can't simply relocate manufacturing?

That's us – creators – discovering we can't retreat to human-only creative environments.

Look at the global response: the US, UK, and Japan have all leaned toward treating AI training on internet content as acceptable.

Meanwhile, creators desperately try to opt out – building walls against an incoming tide.

We have two choices:

First, develop systems to capture value at the point of creation – before the work enters AI systems, where traditional control becomes impossible.

Second, expand our conception of creativity itself to include creative exchanges between human and non-human intelligence.

This transition isn't comfortable. It means abandoning cherished assumptions about human uniqueness.

But it also opens so much more.

Taking advantage of AI isn't just about mastering tools – it's about transcending the human hangup that limits our understanding of creation itself.

Like Gatsby looking over the water for something he'll never have – "boats against the current, borne back ceaselessly into the past" – we're looking backward, clinging to human-only definitions that might not serve us anymore.

Time to turn around and see what's emerging.

RESOURCES

Imagination Engines - Stephen Thaler, Ph.D.

Thaler v. Perlmutter: Human Authors at the Center of Copyright?

OpenAI’s deals with publishers could spell trouble for rivals