Maybe AI magic is science not yet understood. Maybe AI is hype.

Maybe you wish it would all go away.

All are true, and all are opinions. There’s no way to prove them. And as human beings, when things change without “proof”, we kvetch.

It's not just technology changing—it's people's beliefs about it. Some embrace AI with religious fervor, others resist it like confused bystanders.

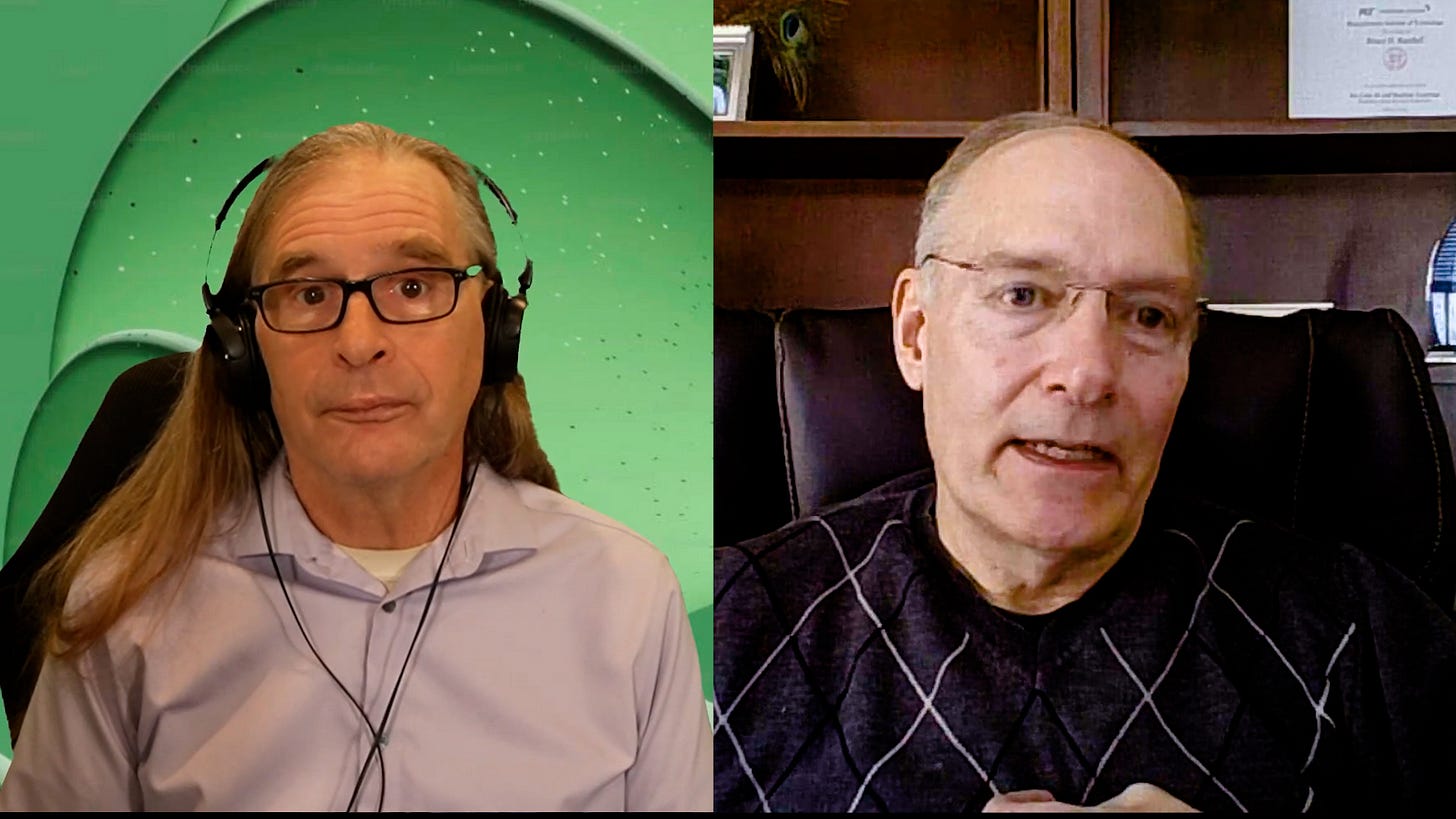

I'm dancing with this tension, joined by tech visionary and Reiki master Bruce Randall, whose background bridges corporate strategy and consciousness studies.

"It comes down to perspective and how people have developed that perspective.

Once they get that, they resist change because they believe they're right.

The other side believes they're right. And it's beliefs, right? It's beliefs."

Bruce Randall

What emerges from our conversation isn't more tech preaching about AI; instead it’s an exploration of the belief codes that determine how we experience and integrate AI into our lives.

The Belief Codes Shaping Our AI Future

Our beliefs about AI create our reality with it. I brought this up to start the discussion:

"I think both sides are like incredibly similar. The engineers just need to realize the creators aren't these stereotypes.

It always comes up—

'They're entitled, their work sucks, I don't like their work, so they shouldn't get paid for it.'

Versus the creatives who think,

'I should get paid for everything that I don't get paid for.'"

These entrenched positions prevent common ground:

Engineers believe creators are entitled and their work isn't valuable

Creators believe they deserve compensation for every use of their work

Both sides resist seeing the other's view

Neither side is completely right or wrong

According to Bruce, the breakthrough happens when:

"When you start going deeper, you start seeing that it's all the same.

It's just a matter of what degree to what side you're on.

If both sides could come in and find common ground and build on it, they could start to go somewhere."

The challenge isn't technical—it's human. It's about our ability to step beyond our belief codes and find a new way forward.

Is AI Taking Us Away From Software Habits We Love?

My early experience with Omadeus showed how we are changing our relationship with data and software:

"I believe software is going to be gone. Not gone, but the world I grew up with is changing.

If we turn paper to digital...we basically create the AI from the bottom up. We're starting with data."

This signals a shift in how we approach AI:

The focus moves from software to data

AI wraps around software rather than replacing it

We're creating "mini LLMs and swarms" rather than traditional applications

As someone who's spent decades in data, Bruce sees an interesting pattern emerging:

"We went away from silos to these data lakes and common structures.

We're going to go back to silos with specific data in there.

That's hard, true data. And if you want it, you have to pay for it."

This evolution is already happening, with companies like OpenAI creating tiered access:

"What they're doing with their $20 to $20,000 pay base is creating higher-end, deeper thoughts. These people use it at a higher level than the average person."

The question becomes: will super AI and superintelligence be available to everybody?

Bruce's answer is direct and candid: "No."

This creates a new digital divide—not just between those with and without technology, but between those with access to increasingly sophisticated AI and those without.

It's not about anti-human sentiment but about how we navigate this transition.

Fear of AI and New Intelligence - It's Not a Competition!

What's driving our resistance to AI? Often it comes down to fear of the unknown and unclear messaging:

"I call it the PR fear angle. I'm still trying to wrap my head around it because everything feels like a Steve Jobs reality distortion with $100 billion dollars.

(Jobs' reality distortion field - I experienced this in a meeting with him, whoa - was a mix of charm, charisma, bravado, hyperbole, marketing, and persistence. It distorted your sense of proportion and of the scale of difficulties, making you believe that the task at hand was possible.)

Sifting through the propaganda... I understand why they have to say it, but why don't we put people in the mix?"

This highlights a disconnect in how we approach AI:

We're caught between hype and fear

The messaging usually excludes the human element

Safety measures limit answers: it’s hard to say something you have to avoid because humans can’t handle seeing themselves, and their data, in the mirror.

Bruce shares what AI really is:

"AI is created by humans.

It's a big data mesh. It's updated.

So it's all our content, and we've got some biases built in there.

That's it—it is what it is."

The contrast with human intelligence:

"As humans, we grow and we have our life that has created our experiences.

I've come back from death twice and I've had interventions and all kinds of weird stuff.

It forms you into who you are."

This fundamental difference shapes how we engage with AI:

"AI has data and I always get opinions and data, and there's a little bit of an opinion in AI because humans built it.

When you try and find true data, it's hard."

The fear many experience isn't about AI itself, but about confronting our own beliefs through this tech mirror.

Bruce suggests that what scares people most is AI's ability to reflect our own thinking back to us.

AI Needs Enablers: Practical Use Cases Start With People

The pathway to successful AI isn't about forcing technology on people—it's about enabling them to discover its value in their work:

"I ask a lot of questions to really understand where they're coming from.

You have to play off the decision makers to understand where they want to go, because a lot of people can't explain it or don't explain it well enough for a technical person to understand."

Bruce shares a real-world example of this approach:

"There's a TED talk from Sanofi, a French pharmaceutical company. The CEO wanted to be the first pharmaceutical company to put AI throughout the company.

He enabled every person in their position to help people do a better job.

But he didn't force it on the company—it was 'let's see how it can help you in your position.'"

The results were remarkable, especially in research and development:

"When you get to R&D and the scientists, they really benefited because they could create drugs faster with less consternation.

Sometimes they get different things they wouldn't have thought about that actually pass the final tests better—things they just didn't think of as humans."

This human-centered approach yields better results than AI working in isolation:

Success comes from enabling rather than mandating

AI shines when it augments human creativity and problem-solving

The best outcomes emerge from humans and AI working together, not from an AI-first approach

Change happens when people discover AI's value for themselves

Bruce emphasizes this critical point:

"When you have the human involved with AI, it's a much better outcome than if you just have AI into AI.

If you embrace it to enable versus saying 'you're going to use this,' you're going to have a lot more success."

This people-first approach represents a shift from how technology has traditionally been implemented.

AI Technology and Human Potential: What's In The Way?

The tension between optimization and humanness creates a central challenge for today's leaders:

"This interesting tension is coming up... it must be so challenging to be a CEO today because you have this optimization versus humanness."

Bruce explores the fascinating convergence happening between AI and other technologies:

"Quantum computing is set to reshape AI, cybersecurity, and data processing. We've got genetic AI, generative AI, and this multi-headed hydra.

We've got all these different flavors of AI coming out, and understanding each and keeping up with each—if you're not studying AI, most people look at it and say, 'I don't know which way to go.'"

This rapid evolution creates uncertainty:

"That curve is moving sometimes week by week, sometimes month by month, but it's moving faster than anything we've done before.

And when quantum meets AI and you put them together, that's a complete paradigm shift.

We can't even imagine what's going to come from that because we've never done it before."

We're entering uncharted territory with converging technologies

The pace of change outstrips our ability to fully comprehend it

People view AI through their existing belief lenses

Perspective determines what value we extract from these tools

Bruce offers a practical approach to navigating this complexity:

"Trust and verify if it's important. If you're asking general questions, you're okay.

But if you're asking something where it matters, you have to check and verify to make sure everything's accurate."

This cognitive dissonance between AI's capabilities and limitations creates a natural tension:

"It's like, okay, it's this super thing that's so smart and intimidating to any human being. Like, 'Why am I here?'

But at the same essence, you can't trust it. That's the extreme opinion."

As we integrate AI into our lives, this paradox becomes important to manage with awareness rather than fear.

Trying to Stop AI? That's a Belief Code. Is It Good for You?

My take on having a relationship with AI and data:

"I'm a hardcore privacy person on my personal level. Like, to an extreme level.

But there is absolutely nothing in my life that isn't tracked.

And I'm not going to live paranoid about it.

At a certain point, all the data is just there."

This reflects a deeper understanding about how you approach AI:

"If you're looking for it to destroy you, you'll probably find that for sure if that's all you're looking for.

And if you're looking for AI to help you..."

Take a friend who was joking with me about AI. She wasn’t a fan:

"I had an interior designer who was grilling me on AI in a good way. She didn't know and she didn't like it.

Just everything about it—she had a lot of fear. So I looked at her and said,

'Well, let me ask you the question everybody asks me: Is interior design sentient?'

And she cracked up with laughter. I said, 'Are you biased? That's why I hire you for your bias.'"

Many attitudes about AI are formed without knowledge or interest, like a comment on the day's gossip column.

That’s denial in a way:

Our fears about AI often reflect deeper anxieties

We choose how we frame our relationship with technology

Humor and perspective shift break through fear

The value in human expertise includes our unique biases and perspectives

As AI and quantum computing converge, Bruce notes:

"When quantum comes up and AI develops, they're both developing.

AI is developing at a faster rate than quantum.

But when the two meet and you put them together, I mean, that's a complete paradigm shift."

The profound question about human identity:

"At what point do we stop being purely human?"

Bruce's approach to sharing his work reflects his overall philosophy:

"I'm putting it out because I want to help humanity and I want to help educate people. If they take it from me, it's flattering.

And if I lose book sales, that's okay, because I want everybody to know this."

This generosity contrasts with the fear-based approaches many take to protecting their work, showing how different belief codes lead to different experiences with technology.

And there is a choice: maybe not to control AI, but to find a way to work with it.

Beyond Belief Codes

Our approach to AI isn't a technical challenge—it's a human one.

"We've never been here before. The AI-driven implant brain computer interfaces.

The line between human intelligence and machine processing is blurring."

Bruce's project called "The AI Human Paradox" asks the pivotal question:

"At what point do we stop being purely human?"

Not to create fear but to foster awareness and thoughtful integration.

The true value comes from combining technological advancement with human consciousness:

AI reflects our own thinking back to us

Our belief codes determine what we see in AI

The most successful AI projects start with human needs

The convergence of quantum computing and AI is harder to imagine, and maybe even harder to believe for some.

Time for us to recognize our belief codes and whether they're serving us:

Engineers need to see creators as partners, not adversaries

Creators need realistic expectations about compensation

Both sides find common ground through listening to the other’s views

The best AI enables rather than mandates

As Bruce's work as both a tech strategist and Reiki master shows, powerful integration happens when we honor both tech advancement and human consciousness.

The AI Belief Codes aren't fixed—they're adaptable frameworks that evolve as we do.

What’s your AI Belief Code?

Resources

The AI Scientist Generates its First Peer-Reviewed Scientific Publication

Leadership in the Age of AI (on Sanofi CEO)

If AI is an Inventor, then So is Nature - Robert Plotkin (EP #35)