The AI Bubble Finally Burst - And It's the Best Thing That Ever Happened

Introduction: When the Hot Air Finally Escaped

Picture this: TechCrunch Disrupt 2024, and the first sign I saw was "Stop Hiring Humans." Who exactly is going to adopt AI with that message?

If you've been following the AI hype train, you've probably noticed something shifting lately. Social feeds are suddenly full of Sam Altman fans quoting him as saying, "yeah, I guess it's over."

The AI bubble has finally popped. GPT-5 came out, and honestly, the promised AGI-level reasoning just isn't there. The pipe dream is gone, and for you and me, that's the best part.

I covered this back in July 2024. "The AI Bubble Burst" became one of my most popular episodes because people understand that the hype and hot air are getting in the way of creativity. The industry's whole message was that AI was going to take your job. Sign me up for that motivation, right?

We've been living in some male-engineered science fiction fantasy that AI is just going to go all Terminator on us. Do you wonder why adoption is slow worldwide? Why people aren't paying for it? It's because we've been sold fear instead of partnership.

But here's the thing - this crash brings AI down from the ivory tower billionaire pitch fest. It shows us how we can work WITH AI as a tool, how it can actually work with you, not against you. As my guest Maya Ackerman said last week, it's about being co-creative with it.

The AI Bubble Bursts: A History of Hot Air and Broken Promises

"A lot of the people saying AI first really don't have a high opinion of human beings. And I'm not talking about their visions. I'm talking about yours."

Let's take a trip through the greatest hits of AI prediction failures. This isn't about being negative - it's about recognizing patterns so we can build something real.

Back in 2015, Elon Musk predicted fully driverless cars within a few years. Then again in 2019. Then again in 2021. We're in 2025 now. They're coming, but not as fast as promised.

Geoffrey Hinton, the so-called Godfather of AI, said back in 2016 that we should stop training radiologists because AI would outperform them within about five years. I checked recently. They still have jobs.

Mark Zuckerberg pitched the metaverse for years and lost an estimated $45 billion because it didn't work.

Listen to Satya Nadella talking about the metaverse - it sounds exactly like current AI pitches, just with different buzzwords. They literally took out "metaverse" and put in "AI."

The pattern is clear: we get sold on revolutionary transformation, but reality moves at its own pace. The difference between hype and progress isn't just timing - it's approach.

AI Myths Revisited: Why This Time Feels Different

We've heard this song before. Every major tech shift brings promises of instant transformation. But AI feels different because it touches creativity, thinking, and decision-making - the stuff we thought was uniquely human.

The myths we've been sold include AI doing "everything for everybody" instead of focused tasks. We've been told to create guardrails instead of limiting the overwhelming amount of stuff we're expecting it to do. The goal seems to be "replace everybody" and "stop hiring humans."

Meanwhile, people are using AI in secret, as if it were a scarlet letter. Whatever you do, don't say it's AI. It's become this weird, detached thing when it doesn't need to be.

Key Points:

Pattern recognition shows AI hype follows historical tech prediction failures

Current messaging focuses on replacement rather than partnership

Real adoption happens quietly, person by person, project by project

Trickle Down AI: Why Top-Down Implementation Fails

"Time and time again, C-suite executives sit there in their little meetings, separate from the employees, in this hierarchical 'I'm up here, you're down there' job model.

They tell people to use AI without considering that, to those working for them, AI means replacement."

Here's what happens in many companies: executives decide they need AI. They hand it down to their teams with no real goal, no central focus, just "you guys figure it out."

Meanwhile, employees have heard that AI is going to replace them and maybe kill them. Yeah, they're really excited to make that happen faster.

This creates a weird dynamic where AI becomes evil because it's going to take jobs. People may work with AI, but they keep it secret.

Executives are doing the same thing - working on their own AI projects privately because admitting you're using AI feels like admitting weakness.

The trickle-down model says leadership decides everything and everyone else follows. But people are sabotaging it, even unconsciously. They're stalling progress because the whole narrative is backwards.

The Real Foundation: Data and Communication

Leaders need to start with people. Then start with your data, which is often dormant, disorganized, and hard to work with. Organization and communication are the core of useful AI, and everything else builds up from there.

Instead of AI trickling down from the top, it should be built from the bottom up. You need to flip this model because the trickle-down approach isn't working. People aren't going to accelerate their own replacement.

I talk to small businesses that have been sold ten AI agents doing different things, but they don't do them well. They need updates and tuning. People are buying AI like it's software - out of the box and working. That's not how it functions.

Key Points:

Top-down AI implementation creates resistance and secrecy

Real AI success starts with data organization and communication

Bottom-up approach builds trust and actual functionality

High Costs, Low Revenue: The Business Model Problem

"The business model of AI is flawed. It doesn't have one yet."

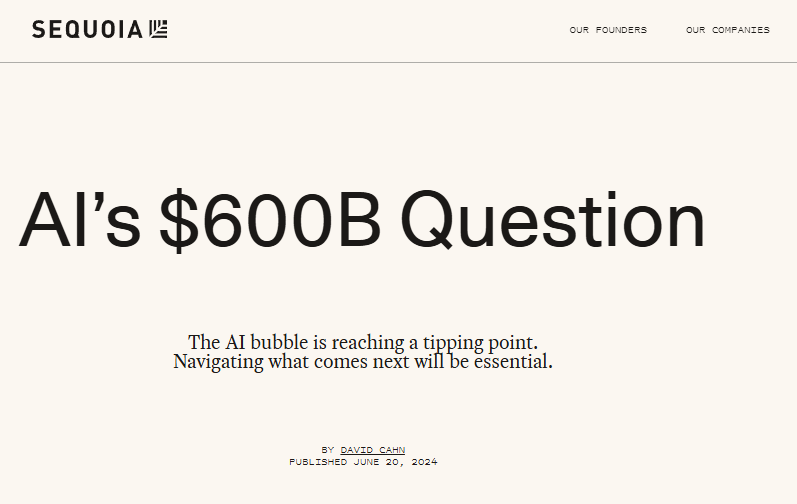

Let's talk numbers. Sequoia published its "AI's $600B Question" analysis, and people were alarmed by how much revenue AI companies would need to justify their infrastructure spending. It's a huge number, and it's not going to happen overnight.

The business model has way too many costs. When you ask GPT-5 a reasoning question, answering it takes massive resources.

Jeffrey Funk, who was warning about this crash long before most people, has shown how much these things cost in tokens. A simple chatbot reply runs 50-100 tokens. But once a model starts reasoning, token counts, and costs, get astronomical.
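To see why reasoning blows up the bill, here is a rough back-of-the-envelope sketch. Every number in it is a hypothetical assumption for illustration only (the price per million tokens, the daily query volume, and the idea that a reasoning trace is 50x longer than a simple reply); none of them are published prices or figures from Funk's analysis. The only point it shows is that cost scales linearly with tokens, so a much longer answer means a proportionally bigger bill.

```python
# Hypothetical numbers throughout: these are NOT published prices or
# measurements from Jeffrey Funk or any AI provider.
PRICE_PER_MILLION_TOKENS = 10.00  # assumed USD per 1M generated tokens
QUERIES_PER_DAY = 1_000_000       # assumed daily query volume


def cost_usd(tokens: int, price_per_million: float = PRICE_PER_MILLION_TOKENS) -> float:
    """Cost in USD of generating `tokens` tokens at the given price."""
    return tokens * price_per_million / 1_000_000


simple_tokens = 100                    # top of the 50-100 token range for a simple reply
reasoning_tokens = simple_tokens * 50  # assumption: a reasoning trace ~50x longer

for label, tokens in [("simple chatbot", simple_tokens), ("reasoning", reasoning_tokens)]:
    per_query = cost_usd(tokens)
    print(f"{label}: {tokens} tokens, ${per_query:.4f}/query, "
          f"${per_query * QUERIES_PER_DAY:,.0f}/day")
```

Swap in real prices and volumes from your own provider; the multiplier is what matters, not the made-up dollar amounts.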

Have you noticed that Claude now caps users with usage limits that reset every five hours? In the early days, flush with cash, they let people keep sessions going. But they're burning cash. You're seeing AI shrinkflation: same package, smaller portions.

The Revenue Reality Gap

OpenAI needs far more revenue to become profitable. Its original deal with Microsoft was set to change once it achieved AGI, but nobody can even agree on what AGI is or why it matters. This is going to look silly in a few years.

Companies are building data centers the size of New York City, using massive amounts of potable water, buying wickedly expensive Nvidia chips. The math doesn't work. Market realities are taking over.

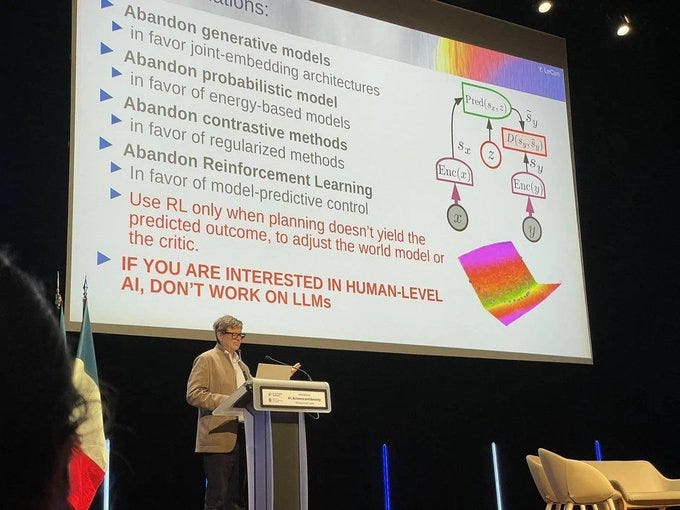

This doesn't mean AI is going away. But in a crash, we don't need five large language models doing everything. We need small language models addressing specific goals.

Key Points:

Current AI costs are unsustainable for the value delivered

Revenue expectations don't match market reality

Small, focused models make more business sense than universal solutions

The 3 AI Problems Hiding in Plain Sight

Problem 1: The Revenue and Reality Gap

The first problem is obvious when you look at the numbers. AI companies need massive revenue to justify their investments, but adoption is slow and people aren't paying premium prices. Goldman Sachs released a report showing how few companies are actually adopting AI effectively.

When you talk to companies, the really advanced tech ones can make it work. But most companies are sitting there thinking it isn't reliable, it isn't trustworthy, and they're trying to make it do too much. This is early-stage technology being sold as a mature solution.

When the biggest players like Goldman Sachs, Sequoia, and Sam Altman start saying it's not working as promised, people listen. But we don't need to wait for them to give us permission to build something better.

Problem 2: The One Model Trap

"One model to rule them all. We just need ChatGPT. Oh wait, DeepSeek is just as good and cheaper. Oh wait, Anthropic's great. Oh wait, Mistral does really cool stuff."

We're seeing the same mistake IBM made in the 70s - believing there would be one computer to rule them all. Sounds silly now, doesn't it?

That's exactly where OpenAI and other AI companies are heading. They're trying to be the moat, the single solution to control it all and make the money.

The reality check is different. There's so much you can do if you keep things small and focused: start with the foundation and build up with small language models.

That's why they hallucinate less: you don't ask them to do too much. That's why they're more trustworthy: you don't let them do things they shouldn't do.

Even Klarna, which made headlines for replacing much of its customer support with AI, quietly started bringing human agents back. Nobody talks about that part.

Others are doing the same because AI doesn't have that capability yet. And why should it?

Problem 3: The "Set It and Forget It" Myth

"Would you hire somebody without training them? Without setting clear performance goals? Without checking their work?

Why do we do that with AI?"

The third problem is treating AI like traditional software. You wouldn't hire someone without training them, setting performance goals, and checking their work until you trust them. But that's exactly what people do with AI.

You need to customize AI to your business. That's the cool thing about AI - instead of learning software and hoping your team adapts, your business becomes the center and you customize AI to fit your solution.

Your business is dynamic. It changes. Consumers buy new things. Markets shift. Things go out of fashion. We react to that constantly, but we think AI will handle it all automatically. That's wishful thinking.

Key Points:

Revenue expectations exceed realistic adoption timelines

Multiple specialized models work better than universal solutions

AI requires ongoing training and customization like any team member

AI as Employee, Not All-Knowing Sage

"It's not how smart it is. It's how much you can trust it. It's not that it's doing things you can't do.

It's that you can do things reliably and take work off your back."

Stop thinking of AI as some mystical intelligence that knows everything. Think of it as a new team member who's really good at specific tasks but needs clear direction and ongoing training.

As a creator, understand your own creative process first. Document it. Find areas where AI can help.

In business, start with your workflows, small ones. Automate around them gradually. Build them up until you can trust them. Develop measurements and check in quarterly.

Don't just set it and forget it. That's the weird, lazy thing nobody would do in business, but somehow AI comes along and we think it's different because "it's smarter than me."
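Here's one minimal way to "check their work" with numbers instead of gut feel: log each human spot-check of an AI output, then look at the approval rate per workflow per quarter. This is a sketch under assumptions: the `Review` record, the "invoice summaries" workflow, the sample data, and the 95% trust threshold are all hypothetical, so pick whatever measurements actually fit your business.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class Review:
    day: date
    task: str       # hypothetical workflow name, e.g. "invoice summaries"
    approved: bool  # did a human reviewer accept the AI output as-is?


def quarter(d: date) -> str:
    """Label a date with its calendar quarter, e.g. '2025-Q2'."""
    return f"{d.year}-Q{(d.month - 1) // 3 + 1}"


def approval_by_quarter(reviews):
    """Return {(task, quarter): approval rate} from human spot-checks."""
    counts = defaultdict(lambda: [0, 0])  # [approved, total]
    for r in reviews:
        key = (r.task, quarter(r.day))
        counts[key][0] += int(r.approved)
        counts[key][1] += 1
    return {key: ok / total for key, (ok, total) in counts.items()}


TRUST_THRESHOLD = 0.95  # hypothetical bar before easing off human review

reviews = [  # made-up sample data
    Review(date(2025, 1, 15), "invoice summaries", True),
    Review(date(2025, 2, 3), "invoice summaries", False),
    Review(date(2025, 4, 10), "invoice summaries", True),
    Review(date(2025, 5, 22), "invoice summaries", True),
]

for (task, q), rate in sorted(approval_by_quarter(reviews).items()):
    status = "trusted" if rate >= TRUST_THRESHOLD else "keep checking"
    print(f"{task} {q}: {rate:.0%} approved -> {status}")
```

The design choice is deliberately boring: you keep reviewing until the numbers earn your trust, the same way you would with a new hire.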

Focus on Organization and Communication

If you do nothing else, focus on organization and communication. Humans aren't naturally good at these things.

AI is wickedly good at them, and you don't have to put it in charge of your business. It's just organizing things better and making sure everybody is on the same page.

Instead of forcing your existing tools to handle communication (some do well, many don't), streamline with AI.

Start there. It's real, practical, measurable, and you can develop AI you actually trust.

Key Points:

Treat AI like a specialized team member needing training and oversight

Focus on organization and communication as primary AI strengths

Build trust through small, measurable improvements over time

The Beginning is Near: Why This Crash Changes Everything

"The future belongs to the builders, not the believers. The future belongs to people who sit down and make common sense decisions."

Now that the crash is starting, the beginning is here. Get excited. The path forward is with us, not against us.

We're at a creative crossroads with AI, and most people think it's either creative salvation or creative apocalypse. I believe it's neither.

AI is a humble creative machine that becomes powerful when you know how to work with it, and it knows how to work with you.

Our most powerful technologies have emerged when we prioritized how people use them, not when we treated the technology as some all-encompassing solution.

The AI crash isn't going to destroy creation - it's going to reveal what we can do with it. It's not going to destroy businesses - it's going to open opportunities to get beyond the hot air and get down to business. Get down to how it works with us and serves us, not how it takes over the world.

It's About You, Not the Technology

I'm planning on being part of the human revolution - human connection, human co-creativity, taking all that horrible, repetitive work off our backs and giving us time to do amazing human things.

The crash frees us to see what we can actually accomplish. Small language models building from the bottom up make sense.

After all, you don't build a house without starting with the foundation. The foundation is people and their data, not some CEO's vision from the top floor.

We don't need to put guardrails on AI - we need to limit the overwhelming amount of stuff we're expecting it to do.

We need tools that don't do everything for everybody, but that focus on specific tasks. We need to work WITH AI creatively instead of being slaves to technology.

Trust your voice. Amplify with AI. The crash isn't the end - it's the beginning of something much better.

Key Points:

AI crash reveals realistic, practical applications over hype

Human creativity and AI capability work best in partnership

Small, focused steps build more value than grand transformations

Success comes from prioritizing people over technology

The AI bubble burst isn't a failure - it's a liberation. Now we can finally build AI that works with human creativity instead of trying to replace it. The future belongs to those who understand that the most powerful technology serves people, not the other way around.

AI Bubble Pops Resources

“AI is in a Bubble:” OpenAI CEO Sam Altman compares AI hype to the Dot-Com crash

Goldman Sachs: Gen AI: Too Much Spend, Too Little Benefit?

Goldman Sachs: AI Is Overhyped, Wildly Expensive, and Unreliable