What's Immortal, Always Hungry, and Sucking the Life Out of Creativity?

I’ve been swimming with tech fear cycles for years. The internet destroys privacy (it did). Mobile phones make us antisocial (yup). Social media may wreck democracy (maybe).

Each wave brings fear, then adaptation, then moving forward. But something about AI fears feels different.

"AI is a vampire," a friend complained over coffee.

"It lives forever. It consumes everything in its way. And that's the source of its immortality."

His words hit harder than my black coffee, because they connected dots I'd been staring at for months.

People fear AI like a digital vampire. It lives forever. It owns what it consumes. It turns our work into its power source.

And this isn't the first time we've feared something feeding on human…ideas.

The links between vampire stories and AI fears go deeper than tech worry. They touch on basic questions.

Who owns what AI creates?

Who gets credit when ideas change form?

What happens when creations outlive creators?

When we say AI "feeds" on data instead of "learns" from it, we're using vampire terms.

When we worry about AI outlasting us or changing our work beyond recognition, we're telling scary stories with tech words.

And this feeling that something is taking our work without asking? That's as old as the stories themselves.

AI isn't scary because it's alien. It's scary because it plays two roles at once.

It's both our explorer into new territory and the guardian at the gate testing if we're ready to move forward.

AI makes us face ourselves by creating something that's like us but not us.

The vampire comparison makes sense.

Both vampires and AI show us versions of ourselves we don't like seeing. Both have origin stories about disputed ownership and mixed-up credit.

WAIT, vampires aren’t real?

When’s the last time you had coffee with AI…

Let's go back to one of the coldest summers Europe ever faced. It's 1816, at Villa Diodati in Switzerland.

This is where both Frankenstein and the modern vampire were born during a storm of creativity – and disputed authorship.

When Lord Byron Didn't Write "The Vampyre" (Got the Credit but Not the Money)

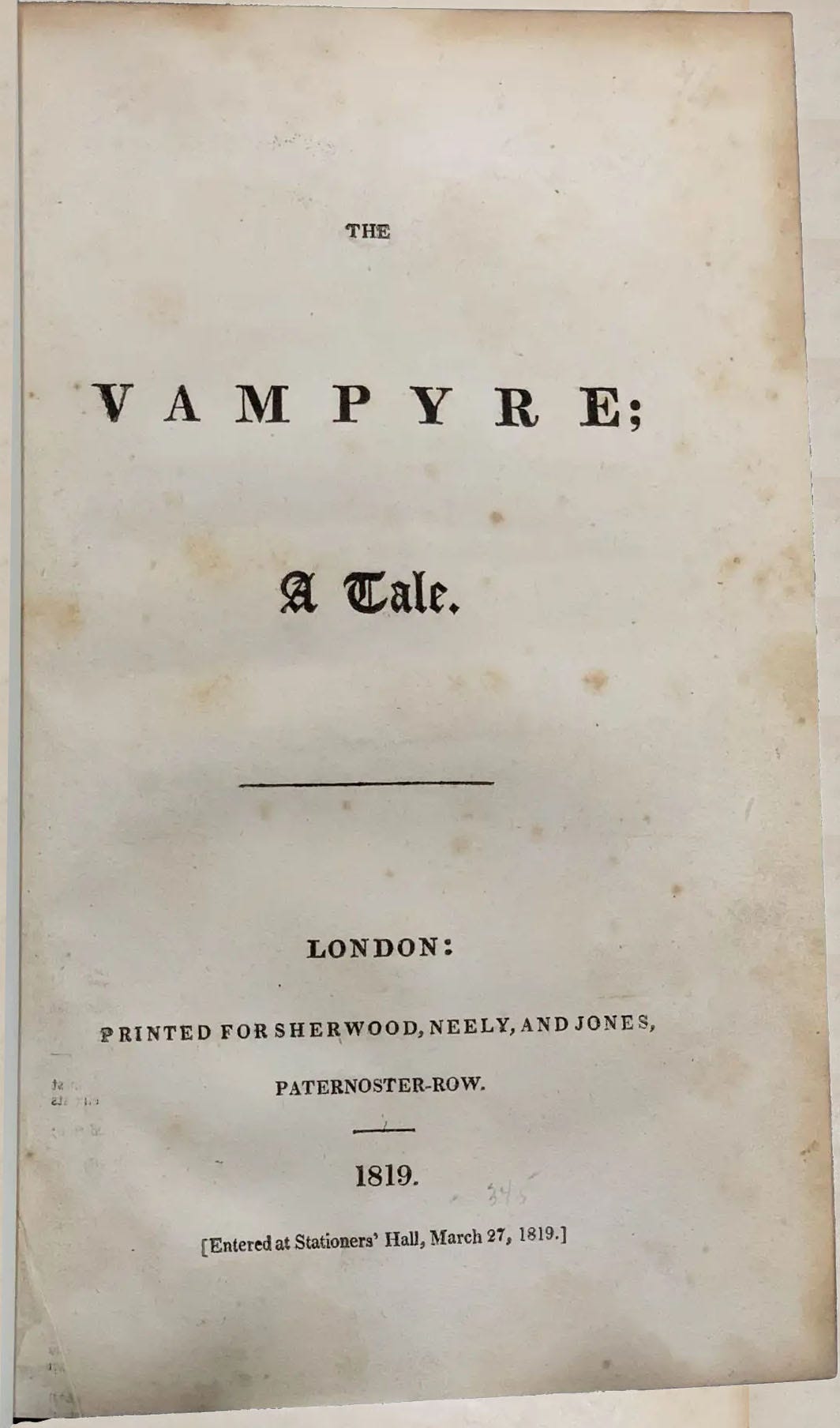

In 1819, a vampire was born twice. First as a character in a story... and then again when a publisher stole that story and put someone else's name on it.

The story was "The Vampyre" – the first aristocratic vampire in English literature and the grandfather of Dracula.

The book had Lord Byron's name on it, but Byron didn't write it.

His friend and physician, Dr. John Polidori, did, building on Byron's initial idea. Neither knew about the publication until it was already circulating.

One story, wrong name, massive influence.

It all began three years earlier during what's known as "the year without a summer."

In 1815, Mount Tambora erupted in Indonesia and filled the atmosphere with ash, creating a cold, dark summer across Europe the following year.

Think of it as the pandemic of 1816, except caused by a volcano they didn't know about.

Trapped indoors at Villa Diodati in Switzerland, Lord Byron, Percy Shelley, Mary Godwin (later Mary Shelley), and Byron's doctor John Polidori grew bored of reading German ghost stories to each other.

Remember, they had nothing else but books. So Byron proposed a contest – each person write their own horror story.

Mary Godwin went on to create "Frankenstein." Polidori took a fragment that Byron had written and abandoned, developing it into "The Vampyre."

They all enjoyed the stories and moved on with their lives. Mary Shelley later published her work to acclaim. Then, suddenly, Polidori's tale was published without his knowledge...

In 1819, publisher Henry Colburn released Polidori's "The Vampyre" under Lord Byron's name.

Byron had fled England due to debts and scandal, making it the perfect time to profit from his celebrity.

The story became an immediate sensation – not because of its content alone, but because readers believed the famous poet had written it.

When Byron discovered this, he was furious, as was Polidori. But what could they do? It had already spread throughout Europe.

The story was out there, transforming into new works, inspiring new creators.

The pattern set in motion that day reaches all the way to our modern vampire tales – and parallels current AI debates.

Like AI outputs being credited to the company that created the model rather than the contributors who made it possible, "The Vampyre" became known by the most marketable name, not the actual creator.

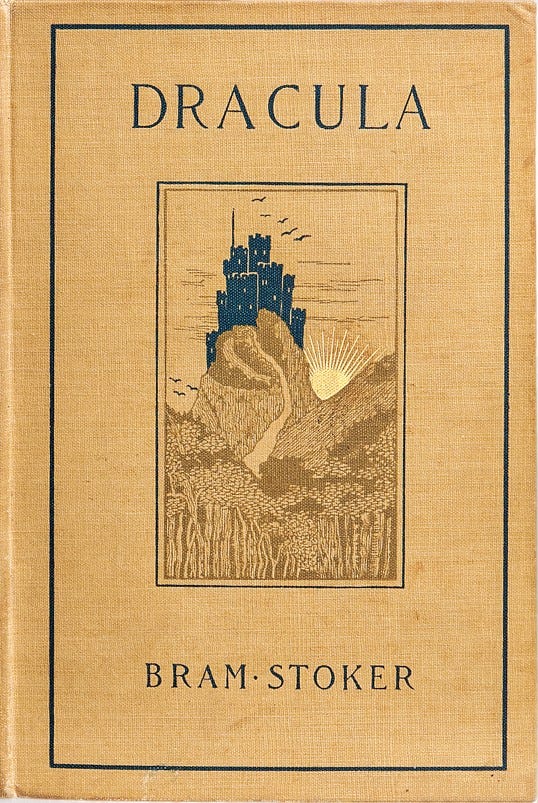

Seventy-eight years after Polidori's story, Bram Stoker picked up the vampire concept and transformed it into something more powerful – "Dracula" – launching a genre that continues through "Twilight," "True Blood," and beyond.

And that's exactly where the AI conversation gets way complex.

From Gothic Horror to Modern AI: Ownership Matters, Fear Rules

At Anime Expo 2024, a Chinese digital artist named Yuumei is selling her vibrant, colorful work.

A buyer makes a purchase, looks closely at the art and says it was made with AI.

The artist denies it. Provides time-lapse videos of her process. The internet erupts.

But it wasn't AI at all. Yuumei had been tracing another artist's work, specifically that of an artist known as "Ask."

She called it "over referencing." The buyer gets a refund. The posts get deleted.

We're so focused on catching AI, we question all we see. The fear of AI is so pervasive that it's now a default suspicion when seeing impressive work.

We're not just worried about AI taking our work – we're worried about losing the ability to tell what's human and what isn't.

It's not about the tools anymore. It's about trust.

When an accomplished artist gets accused of using AI, it reveals our deeper anxiety.

The line between human and machine creativity is blurring. We can't tell the difference through our own eyes anymore.

Just like in the early 19th century.

In 1819, a publisher put a famous name on someone else's work to sell more copies. In 2024, we assume impressive work may be machine-generated.

Both involve the same fundamental question: who gets credit?

In the era of the vampire story, publishing was new, open, and governed by few rules.

That's exactly where we are with AI today.

No clear standards. Uncertain credit. Creative works flowing freely across boundaries we haven't fully defined yet.

And just like the literary scandal that led to Dracula, we're only at the beginning of this.

What matters isn't stopping the change – it's understanding what's driving our fears about it.

Because what scares us about AI, like vampires, isn't just what it does. It's what it represents about us.

The Insatiable Hunger for AI Data

Vampires need blood. AI needs data. Both feast on human creation to sustain themselves.

Both entities transform what they consume into something new – energy, power, capability.

Both raise the question of whether they take with permission or simply feed.

Both trigger primal fears about something taking what's ours without permission.

So is training AI like feeding, or is it like learning? This question is at the heart of our copyright debates.

If AI is "feeding" like a vampire, then it's taking without giving back to the society it drains.

If it's "learning," that's where copyright law arguably shouldn't apply, at least according to countries like Japan. The US isn't so sure yet.

We're in the early days of figuring out what copyright law means for AI in every country. And is the knee-jerk reaction to say "it's feeding on what we have" just a way to stop it?

Or is it a normal human impulse in the face of things that resemble vampires – unknowable, powerful, and transformative?

This hunger for data ownership becomes especially important with AI because we can't unwind it.

We can't go backward. The data is in there.

So ownership now turns on the AI companies' claim that the data has become transformed content their models have learned from.

It isn't stored in the form you remember it going in. As we deal with ownership, we must deal with the transformative nature of what's happening here.

If Bram Stoker had to pay for the inspiration that led to "Dracula," who would have gotten the money?

The publisher who stole Polidori's work? Polidori himself? Byron for his original fragment?

That's the tangled creative “who’s the author” mess we're trying to sort out with AI.

People using ChatGPT for term papers aren't thinking about whether Shakespeare's estate got paid for all those sonnets in the training data.

They're just trying to finish their homework. But that's the thing about AI – we're all using it, but few understand how it works.

When does AI stop being a fancy copying machine and start being... intelligent?

And who gets to decide? Because right now, AI-created work is treated as guilty until proven human.

And that brings us to the copyright paradox itself.

The Copyright Paradox

You know, both Frankenstein and Dracula could get copyrights if they existed today - they used to be human.

And AI? It used to be human too, in the data and code that evolved it. Our thoughts are its ancestors, our dreams its guidance, and our egos its threat.

The US copyright system is like a three-legged stool - take away any leg, and the whole thing falls. Let's see how AI handles each one.

First leg: fixed in tangible form. This is the easy part. AI passes this test without breaking a sweat - generated text saved to files, art appearing on screens. The output exists somewhere real.

Second leg: original work. AI struggles because it's trained on existing works. It can't prove originality. There's no clear chain of creation. Remember The Vampyre? At least we knew where that came from.

Third leg: created by a human author. In U.S. law, an "author" means a human author. Not a machine. Not an AI. A person.

This is why the U.S. Copyright Office won't register purely AI-generated work. It's not about quality. It's not about creativity.

It's about humanity. Even if an AI writes the next "Frankenstein," without human creative input, it goes straight to the public domain.

Think about The Vampyre. Wrong author name? Still copyrightable.

Borrowed heavily from Byron's fragment? Still copyrightable.

Why? Because Polidori was human, and he made creative choices. The publisher might have given false credit, but the human creativity was there.

With AI? We're still figuring out what counts as human creativity. Is a prompt enough?

How much editing do you need to do?

When does AI assistance become AI creation?

Dracula came out 78 years after The Vampyre.

Imagine if Bram Stoker had to track down that publisher who stole everyone's credit and pay them for "inspiration rights."

That's like paying a thief for stealing something that inspired you to make something better.

The copyright paradox creates a kind of "liar's dividend" - an incentive to falsely claim human authorship, since purely AI-generated work can't be copyrighted. And it is still hard to prove a fake.

This creates a dynamic where honesty about using AI tools comes with a legal penalty.

And this is where our mirror problem begins.

The AI Mirror Problem

There's an old myth that vampires cast no reflection in mirrors. Maybe that's AI's problem too - it creates, but it can't see itself creating. It can't understand its own process.

But neither can we, really. Lady Gaga has said she wrote "Just Dance" in about 10 minutes. Some artists labor for years on a single work.

When did time become the measure of creativity?

When did proving we're human become necessary to own what we make?

We've created AI in our image, given it powers that both fascinate and terrify us, and now we're shocked when it reflects our nature back at us.

Classic human move, really. We've been creating external indicators of our internal powers and fears since we painted on cave walls.

It's what Joseph Campbell, the famous mythologist, drawing on Carl Jung, would have called the shadow self, projected onto reality.

That's a lot of what's going on with AI.

The Yuumei case shows this mirror effect in action. Here's someone well-known, very successful.

You instantly respect her work when you look at it.

And people accuse her of using AI because AI has become their fear: "I'm being cheated."

Everything we look at now, we wonder, is that real?

And that could be a good thing. For all the people pushing critical thinking with AI, it might be one of the best outcomes.

People question what they see.

But the more famous you become, the more likely people are to accuse you of using AI.

And why does accusing someone of using AI feel like accusing them of doing something wrong?

In Campbell's framework, AI plays two mythological roles at once - it's both the hero and the threshold guardian.

It's the adventurer we send into unknown territory, and it's the intimidating figure at the boundary testing whether we're ready to go past the threshold.

What makes AI different isn't that it's some alien intelligence - it's that it plays both of those parts in our own story.

It challenges us to understand ourselves better by creating something that mimics us yet remains fundamentally other.

Maybe what we fear about AI isn't that it will drain us dry - vampires tend to keep their victims alive, after all.

What we fear is that it reflects something uncomfortably true about ourselves: our endless hunger for more, dreams of living forever, and consuming without consequences.

Maybe what we fear isn't the AI vampire at all. Maybe what terrifies us is staring into that AI mirror and seeing nothing staring back.

This technology holds up a mirror to humanity – one that shows our creativity isn't quite the magical, mysterious process we claim.

It reveals how we learn, how we create, how we transform ideas into something new. And sometimes reflections make us uncomfortable.

When we look at AI-generated work, we're not seeing a monster. We're seeing ourselves – our patterns, our inspirations, our collective creative consciousness. But with the creator's face erased.

I'm not saying ignore the real issues. When your creative work gets sucked into training data without your say-so, that stings.

When companies profit from models built on millions of unpaid contributions, that's unfair.

But painting AI as a blood-sucking monster misses the point. The path from Byron's fragment to Polidori's "The Vampyre" to Stoker's "Dracula" wasn't a feeding frenzy.

It was ideas evolving, transforming, improving – exactly what happens in AI, just faster and at scale.

AI is human in its data and in the people who created it.

It's human in the thoughts, stories, processes, and code produced by generations of people.

That's why the ownership question is so tricky.

We want clear lines between "mine" and "yours," but creativity has always been messier than that.

AI makes this messiness impossible to ignore.

Remember Yuumei? Her art was human-made, but it was accused of being AI-generated.

The border between human and machine creation is blurring, not because machines are becoming more human, but because we're finally seeing how pattern-based human creativity really is.

And maybe we don't need to fight the vampire. Maybe we need to accept that our ideas live beyond us – transformed, reimagined, built upon.

That's not immortality stolen by a monster. That's the immortality we've always had.

As Joseph Campbell might say, AI isn't our enemy – it's our technological shadow self, reflecting both our strengths and deep flaws.

We've projected our consciousness onto code, just like ancient people projected it onto cave walls.

Neither vampires nor the AI vampire are real.

AI is a technology, an intelligence, and a way of looking at ourselves. Sometimes we don't like what we see, and other times it's the key to understanding what we can become.

The mirror may be blank, but what do we do with that reflection?

That's entirely up to us.

Resources

Interview With The AI Vampire, Part 1