I remember it clearly: the exhibitor’s booth with the simple name Ruth at TechCrunch Disrupt 2024, away from the posturing about AGI and the next trillion-dollar unicorn.

Megs Shah and Sandy Skelaney are doing something different, and they are doing it as a non-profit: Ruth is an AI app that does not track its users and helps them use technology to protect themselves.

Ask Ruth - Your Digital Guardian - Here

Ruth delivers trusted information to people who are confused about harassment and bullying, in danger from abuse and trafficking, or technically exposed in ways they don’t know.

Their creation is RUTH, a digital guardian helping people navigate the dark corners of tech-facilitated abuse.

The story of how RUTH came to be reveals the human side of artificial intelligence, how sometimes the most powerful solutions come from personal experience.

"I was trying to keep up with my son playing video games.

I couldn't figure out what he was doing on there, and I was worried about sextortion.

So I went on to OpenAI and asked how to talk about sextortion with your teenager."

The response? "Sorry, it's out of policy."

For Megs, a seasoned technologist, this wasn't just an inconvenience – it was a call to action.

"The technologist in me didn't accept that answer," she says with a slight smile.

"I was like, oh hell no, this can't be."

That moment of frustration in 2020 led to what would become Parasol Cooperative and RUTH.

The drive to move from AI concept to reality requires more than technical expertise. It demands a deep understanding of the human cost of technology abuse.

Sandy Skelaney, Parasol's COO, frames the challenge:

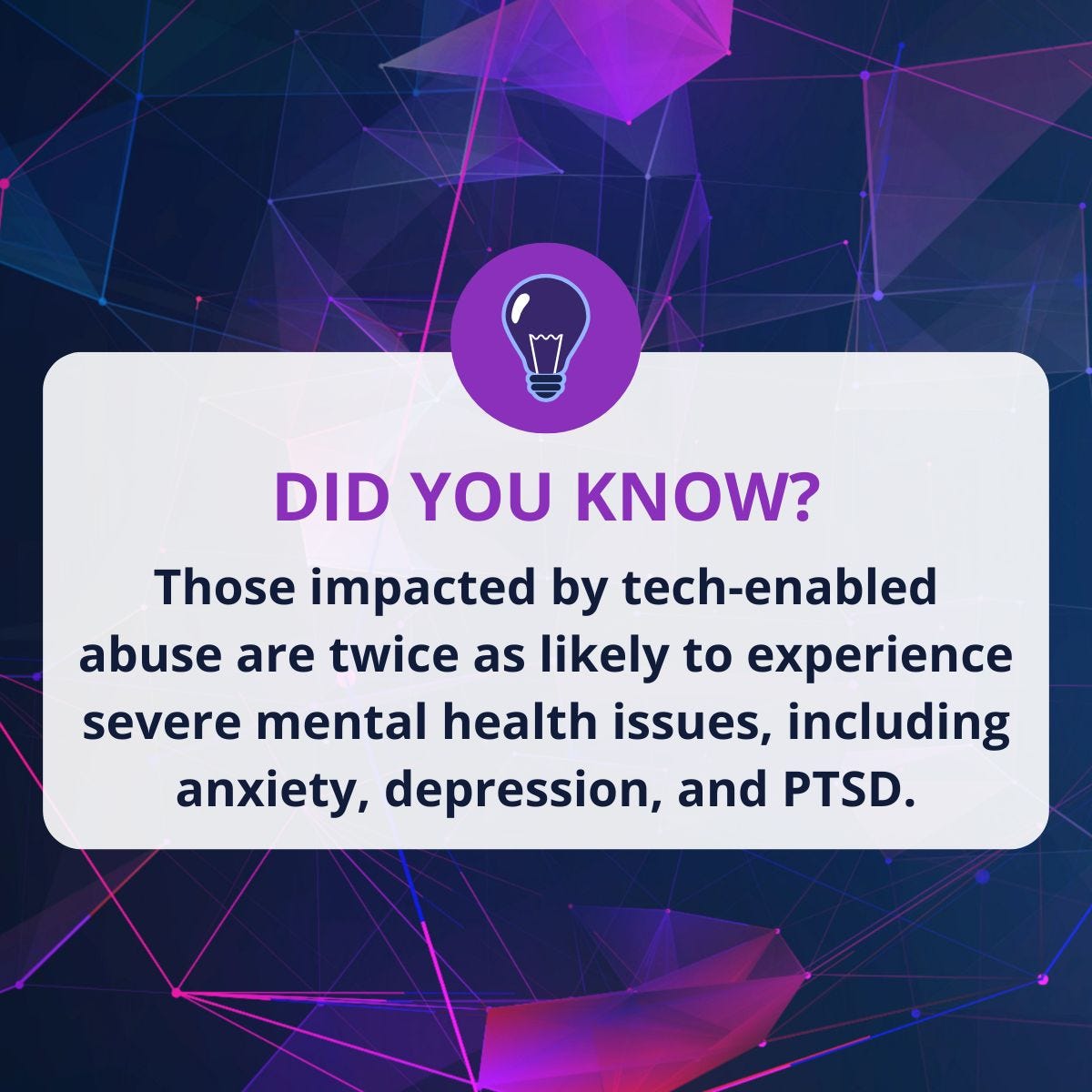

"The field of tech-enabled abuse has grown exponentially.

We're talking about sextortion, deepfakes, revenge porn.

Organizations working with survivors of domestic violence report that 98% of the survivors they're working with are experiencing technology-related abuse."

What makes RUTH different isn't just its focus on tech abuse – it's how it approaches the problem.

While most AI applications harvest user data, RUTH deliberately maintains zero knowledge of its users.

When you exit the chat, your data vanishes. No emails collected, no names stored, no digital breadcrumbs left behind.
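To make the idea concrete, here is a minimal sketch of what "your data vanishes when you exit" could look like in code. It is not Parasol's implementation; the class name `EphemeralChatSession` and its methods are hypothetical, and the only assumption is that a conversation lives in memory and is wiped when the session closes.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class EphemeralChatSession:
    """Hypothetical chat session that holds messages in memory only.

    Nothing here is written to disk, a database, or a log, and no
    email, name, or other identifier is ever asked for.
    """

    _messages: List[Tuple[str, str]] = field(default_factory=list)

    def add(self, role: str, text: str) -> None:
        # Keep the message only for the lifetime of this session.
        self._messages.append((role, text))

    def history(self) -> List[Tuple[str, str]]:
        # Return a copy so callers cannot keep a live reference.
        return list(self._messages)

    def close(self) -> None:
        # Leaving the chat erases the conversation.
        self._messages.clear()

    def __enter__(self) -> "EphemeralChatSession":
        return self

    def __exit__(self, exc_type, exc, tb) -> bool:
        self.close()
        return False
```

Used as a context manager (`with EphemeralChatSession() as session:`), the history is cleared as soon as the block ends, even if an error interrupts the conversation.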

"I've been in data for God knows how long," Megs explains, "and I've been on the side of 'let's collect every possible piece of information we can about a person.'

But being on the survivor side of the mindset now, I've realized that could revictimize people.

The goal for us is ensuring we're not participating in that."

This privacy-first approach isn't just an ethical choice – it's a safety feature.

For people facing tech-enabled abuse, being tracked by another app, even one trying to help, could put them at risk. RUTH's design ensures that they can find help without leaving a digital trail.

Creating an AI that's both helpful and trauma-informed required rethinking how AI should interact with new users.

"Ruth is very clear that they're providing sensitive responses, making sure they're checking in with your safety," Sandy explains.

"But also at the same time, 'I'm still an AI, I'm not a human being.'"

The technical implementation of RUTH reflects this careful, human-centered approach.

While many AI companies rush to fine-tune their models with user data, Parasol made the conscious decision to build differently.

They chose Anthropic's model as their base after extensive testing, appreciating its robust responses and customization potential.

"We could have easily just put a chatbot out there and said, 'hey, try it out,'" Megs explains.

"But we are very much survivor focused. In everything we do, we try to consider: how would this make me feel if I was the one asking these questions?"

This led to months of careful development between their soft launch in June and their wider release in September 2024.

The team worked on prompt engineering, testing, and validation to ensure RUTH's responses felt more like talking to a supportive friend than a clinical professional.

They focused on breaking down language barriers, essential in making support available to immigrant communities.

RUTH's trauma-informed approach manifests itself in subtle but crucial ways.

Before diving into solutions, it checks if users are in a safe place and whether their devices might be compromised.

It acknowledges the gravity of situations without inducing fear, offering practical guidance while ensuring users maintain agency over their choices.

The privacy architecture is equally thoughtful.

"When you go to our site to use Ruth," Megs emphasizes,

"we do not collect any emails, we do not collect your name.

We even tell you don't give us all this information. We don't need it."

This zero-knowledge approach extends to their development process – they don't even use chat data to improve the model, a stark contrast to standard AI development practices.

Instead of relying on user data for improvements, RUTH uses a sophisticated RAG (Retrieval Augmented Generation) architecture that pulls from carefully vetted sources.

This allows them to update their knowledge base with the latest research and safety information without compromising user privacy.

They've built their backend to be modular, allowing them to switch out AI models as better options emerge, while maintaining their strict privacy standards.
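For readers curious what that combination might look like, the sketch below pairs a tiny retrieval step over vetted documents with a model that can be swapped out. It is an illustration under stated assumptions, not RUTH's actual code: `VettedDocument`, `retrieve`, `build_prompt`, and `answer` are hypothetical names, and the word-overlap ranking is a deliberately naive stand-in for whatever search the real system uses.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class VettedDocument:
    source: str  # hypothetical label for a reviewed source
    text: str


# Any function that turns a prompt into a reply can act as the model,
# which is what makes the backend swappable in this sketch.
ModelFn = Callable[[str], str]


def retrieve(query: str, docs: List[VettedDocument], k: int = 2) -> List[VettedDocument]:
    """Rank documents by naive word overlap with the query."""
    query_words = set(query.lower().split())
    scored = [(len(query_words & set(d.text.lower().split())), d) for d in docs]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the query.
    return [doc for score, doc in ranked[:k] if score > 0]


def build_prompt(query: str, context: List[VettedDocument]) -> str:
    """Put the retrieved passages in front of the user's question."""
    passages = "\n".join(f"[{d.source}] {d.text}" for d in context)
    return f"Use only these vetted sources:\n{passages}\n\nQuestion: {query}"


def answer(query: str, docs: List[VettedDocument], model: ModelFn) -> str:
    """Retrieve, build the prompt, and hand it to whichever model is plugged in."""
    return model(build_prompt(query, retrieve(query, docs)))
```

In this arrangement, new safety guidance arrives by adding a `VettedDocument` rather than by training on anyone's chats, and changing the model means passing a different `model` function to `answer`.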

The global expansion of RUTH happened almost by accident, but its rapid adoption speaks to a universal need.

From its initial testing phase, the team discovered RUTH was providing accurate resources and support not just in major cities, but in remote locations across the globe.

"We've tested all over the world," Sandy notes, "in Kandahar, Afghanistan, Algiers – and it seems to be giving accurate resources and information."

This international reach reveals how tech-facilitated abuse transcends borders and cultures.

In each new country, RUTH adapts its responses to local context while maintaining its core principles of privacy and trauma-informed support.

The team has been particularly moved by how different communities have embraced the tool in ways they never anticipated.

For victim service professionals worldwide, RUTH has become an invaluable ally.

"Advocates are trained in mental health," Sandy explains, "they are not trained in digital safety planning. Being able to give them a tool so that they can sit down and do a case plan with the survivors they're working with... it absolutely supercharges that work."

The feedback loop is designed to maintain user privacy while allowing for improvements. Users submit anonymous feedback about incorrect or unhelpful responses, which the team prioritizes for updates.

This allows them to refine RUTH's responses without compromising their zero-tracking commitment.
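A rough sketch of how such a feedback loop could stay anonymous is shown below. The record shape is an assumption made for illustration: `AnonymousFeedback`, `Issue`, and `prioritize` are hypothetical names, and the real form may capture different fields. The point is what the record leaves out: no user ID, no email, no IP address, no transcript.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, List, Tuple


class Issue(str, Enum):
    INCORRECT = "incorrect"
    UNHELPFUL = "unhelpful"


@dataclass(frozen=True)
class AnonymousFeedback:
    """Hypothetical feedback record with no identifying fields."""

    issue: Issue
    topic: str  # coarse category only, e.g. "deepfakes"


def prioritize(reports: Iterable[AnonymousFeedback]) -> List[Tuple[str, int]]:
    """Count reports per topic so the most-reported topics come first."""
    return Counter(report.topic for report in reports).most_common()
```

With reports shaped like this, the team can see which topics draw the most complaints without being able to tell who sent them.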

"Every day technology is changing and evolving," Sandy notes.

"Every day there's new ways people are finding to abuse.

How do you train it to be trauma informed?

How do you give it the right information in terms of the intersection of tech and abuse?"

Their solution was to create a system that could be updated rapidly with new information while maintaining the emotional intelligence and safety-first approach that makes RUTH unique.

In less than a year, what started as a tool to help parents discuss online safety with their children has evolved into a comprehensive resource being used by individuals, families, and professionals across 29 countries.

It provides support in situations ranging from basic digital safety questions to complex cases of tech-facilitated abuse.

In a tech landscape focused on data collection and monetization, Parasol Cooperative is showing that it's possible to build powerful AI tools that prioritize privacy and safety above all else.

RUTH represents a different vision of what AI can be.

While today's AI discussions often center on replacing humans, RUTH aims to enhance human connection and support.

It's not trying to be human – it's trying to be transparently artificial.

"Knowledge is power that no one can take away from you," Megs says, summing up their mission.

"And if we're able to equip you with that, then we've done our job right, because we've trained you to be more vigilant about yourself and your family online."

For those interested in supporting this work, Parasol Cooperative accepts donations through their website – and as Megs emphasizes, every penny goes directly to making these tools freely accessible to those who need them.

In a conference full of startups promising to change the world, I found one that might – not through grand pronouncements about artificial general intelligence, but through the simple act of helping people feel safe in an increasingly complex digital world.

That simple act is likely worth more than a unicorn.

Especially to those it helps.