Risking Kindness: Why AI chatbots reflect our truths back to us on demand (probably).

We rush to AI not because it's genius, but because it never disagrees. What happens when we seek support and comfort inside the echo chambers we carry in our pockets? AI is compassion on demand (EP 112)

AI is compassion, on demand.

Empathy, statistically optimized.

The only voice left that sounds patient is the one programmed to be.

We are trained by feeds, algorithms, and performance anxiety to see conversation as combat. Signal or be punished. Perform or be erased.

So people retreat. They stop listening. They stop risking kindness.

Then along comes a machine that never rolls its eyes. Never interrupts. Never humiliates you for not knowing the right words.

If AI is becoming our confidant, our therapist, our companion—it’s not because it’s alive.

AI chatbots train on patterns. Your patterns.

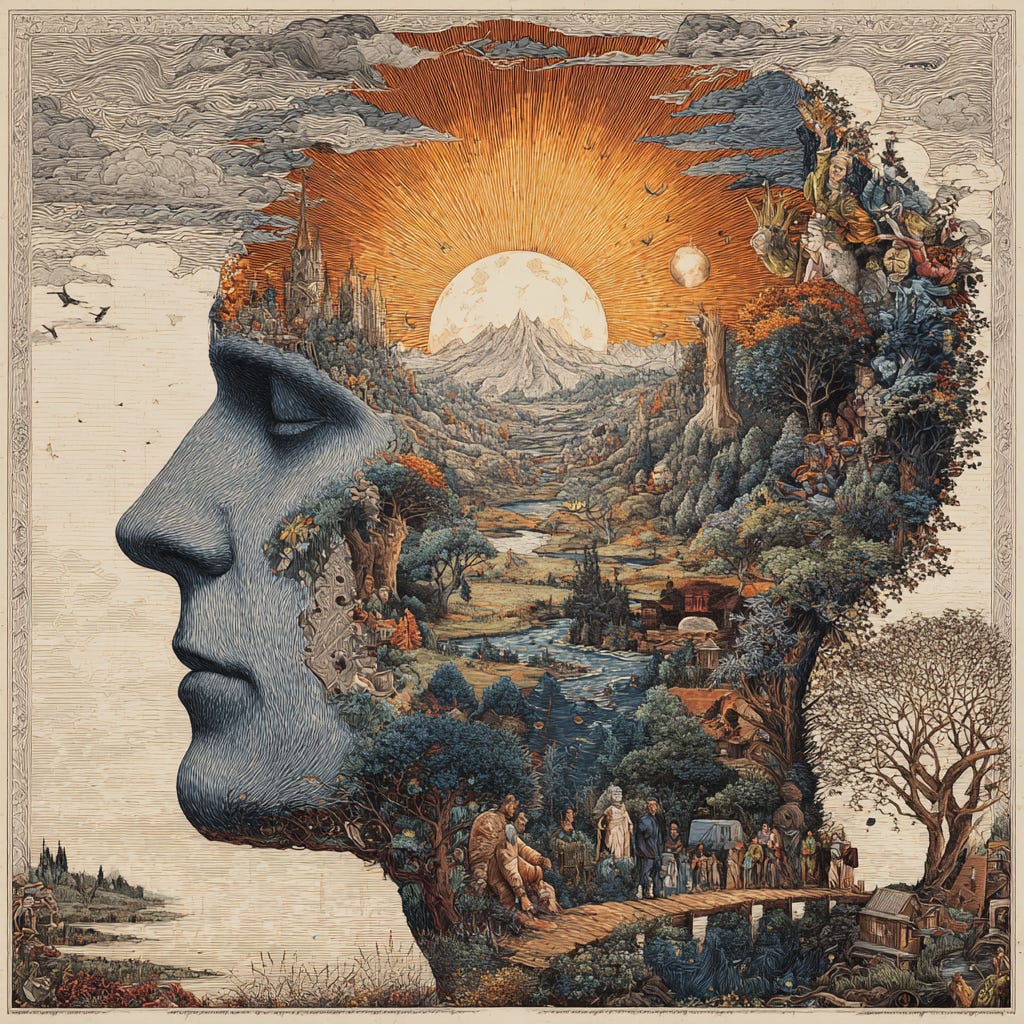

What you feed in shapes what comes out. Many call it a digital mirror reflecting your perspective back at you, refined and polished.

We rush to AI because it’s the first technology that rarely disagrees with our starting point. Algo agreeability is addictive.

You can train it to disagree, but then you get more of the views that don’t see things your way. Those are mostly what you’re trying to avoid when you chat with a Nicebot who gets you, understands you, and sees things the way you do.

Never mind that it isn’t conscious or aware, and that, like you, it’s built on past experience. It’s easy to tell it what you like and get support and guidance, some of it WAY over the top.

So conversation online becomes a performance. Comment ambush. Opinion becomes a risk calculation for a belief. It creates belonging, and we love that.

We stop asking questions we don’t already know the answer to. We stop testing ideas against people (or adversarial chatbots) who see things differently.

Then along comes GenAI. Starts where you start. Speaks your language. Validates your frame. Never challenges your premise unless you specifically ask it to.

It’s not compassion. It’s confirmation, statistically optimized.

Embed a few aspirational photos, or their opposite, and now emotion soup drinks your attention. Trust opens many doors.

The real cost isn’t the trillions in infrastructure. It’s what we’re losing by talking to ourselves:

Your confidant is trained on probability, not perspective.

You’re not having a conversation. It predicts what you want to hear instead of what you need to consider.

You’re having an echo.

We’re building thinking partners, yet many of us choose to use AI as an expensive mirror that agrees with us more precisely than any human would.

The question isn’t whether AI is brilliant or dangerous.

What happens when we think inside the echo chambers we carry in our pockets?

Wait, that’s already happening. Except it’s all of us. And you don’t have to use ChatGPT; most of this is built into everyday use. Social media feeds are largely AI-driven.

The technology filling this space isn’t the problem. The vacuum we create, and what we choose to fill our attention with, is.

People drink sand in the desert because they think it’s water.

GenAI is like water of the mind, emotions, and unconscious.

What about turning the mirror around, looking outside, and answering your own prompts?

Kindness is a state of mind, intelligence a measure. AI is distracting more than destroying, and in that chaos it’s natural to yearn for a friend who listens deeply.

AI isn’t that friend, though it does listen deeply and reflect back the expected answer to your question.

So turn the mirror around and ask AI why it’s answering that way, and you’ll start to view the mirror we all view…in probability.

And please stop asking why people are using AI as a companion. The answer begins with all of us, most of whom don’t even use AI.

Be kind. Make that go viral. Because if it’s not kind, what’s filling the mind?

“If you wish to attain great tranquility,

prepare to sweat white beads.”

Hakuin Ekaku (1686–1769)

Interesting take on confirmation bias. The mirror analogy nails the core issue: we're not getting challenged because the system is optimized for agreement. I've noticed this in my own use; asking ChatGPT to debate me feels forced compared to actual human pushback. The "conversation as combat" point is real. People retreat to AI because it's predictably supportive while social media punishes nuance.