Are Deepfake Political Videos the Ultimate Threat, and Is California's Upcoming Legislation the Cure?

Welcome to the funhouse mirror of modern democracy, where reality is negotiable, and your eyes and ears might lie.

Listen and ask yourself: Can you spot the deep fake?

Another trick is trying to sound black. I pretend to celebrate Kwanzaa, and in my speeches, I always do my best Barack Obama impression. So, hear me when I say that I know Donald Trump's type.

Pretty easy to spot, isn't it? Do you think that will impact the election, as if nobody can figure it out?

And, of course, Elon shared this Kamala video and said parody is legal in America, and that's the problem.

Some people don't like Elon, and Gavin Newsom even responded to this by saying, you know what? This kind of deepfake political content can't happen.

And, I'll be signing a bill in a matter of weeks to make sure that it is.

Gavin wants social platforms to identify and block deepfakes, and he's not alone.

We have to focus on the real problem with deepfake political content happening right now. It's only being solved in one country, by an aunt, Auntie Meiyu, whom I'll show you later in this video.

What she is starting to solve doesn't come from the government or social platforms playing whack-a-mole with meaningless A.I. videos.

A.I. works for them and helps them. In this episode, "Deepfake Political Mania: Regulatory Overkill or Free Speech?", we're going to look at this through the lens of you and me being able to use social platforms without letting the deepfake political content mania get in the way of what's going on.

So, let's start by spotting the deepfakes.

First, let's spot the deepfake, a quick interactive game. I will show you some images in a video, and you can decide whether they're real.

Now, first up, of course, we have Donald Trump. And here's an image of him with several black women in a photo.

Looks very, very friendly. Now, is this a deepfake or not?

Well, it turns out it is. And here's another photo where he's sitting with six black men, and it was done by right-wing Florida host Mark Kaye.

He shows a smiling Trump embracing these happy black women.

And on closer inspection, you see that some of them are missing fingers, and some have three arms.

Typical A.I. stuff. But if you don't look closely, maybe you think Trump is making those deals, and Mark Kaye says,

I'm not claiming it's accurate. I'm not a photojournalist.

I'm not out there taking pictures of what's happening. I'm a storyteller.

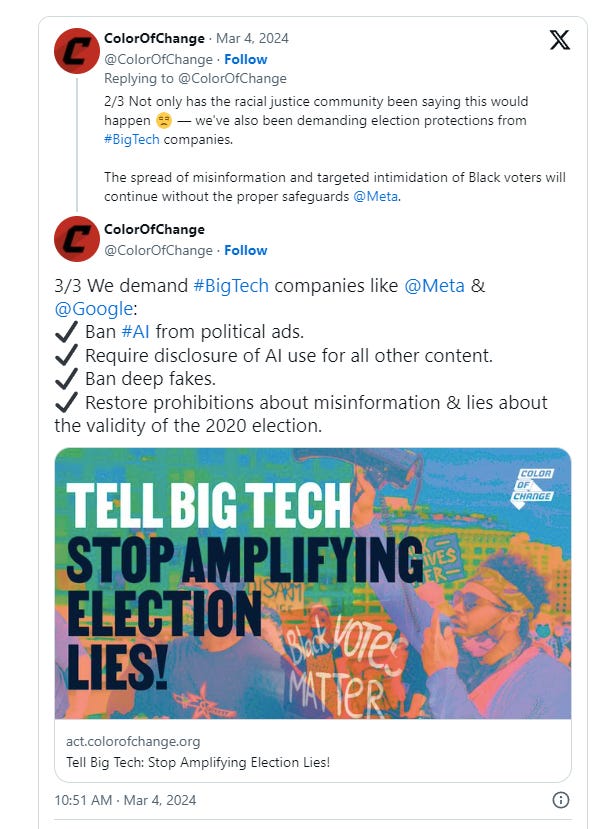

Now, Color of Change has a different view. They see this as spreading disinformation and targeted intimidation of black voters.

Faking an association with black people that never happened is a serious issue. I'm not trying to dismiss it, but can we regulate it?

The issue is how we prevent and identify this without necessarily pushing it onto social platforms, as California does.

Let's look at a Trump video and play the game now.

Listen to this clip, supposedly from CPAC. Is this the real Trump, or is this a fake Trump?

Our enemies are lunatics and maniacs.

They cannot stand that they do not own me.

I don't need them. I don't need anything about them. I don't need their money. They can.

That one was a little hard to guess, but that's fake Trump. Now listen to the real CPAC video.

Hey, when I'm in a news conference, are people these maniacs?

These lunatics are screaming at me. They're just screaming like crazy.

And, you know, you take them, and I love it, you know? It's like a mental challenge.

As you can see, that one is really subtle. But think about whether a social media company should be expected to figure out which video is real, or whether the image of Trump is real.

So, let's move on to Kamala Harris. Let's play the same game. Here's a picture of Kamala. She's dressed in bright red with a red hat, looking very much like a Chinese communist icon: Communist Kamala.

Obviously, you look at this and know that it is not real if you follow anything in U.S. politics. The Trump Organization made this point.

Is this satire? Is this a parody? Is this free speech?

Interesting question. I can see both sides and can see how it'd be offensive. But let's now do the spot the deepfake test again.

Watch this video of Kamala. Is it Kamala or deepfake Kamala?

I was selected because I am the ultimate diversity hire.

I'm both a woman and a person of color, so if you criticize anything I say, you're both sexist and racist.

Okay, you guessed it right. Yes, that's deepfake Kamala. It's not hard to figure out because those are not things she would say.

In fact, most of us on social media are very adept at figuring this out. It's not like the news. But listen to the real Kamala.

The freedom not just to get by, but get ahead.

The freedom to be safe from gun violence.

The freedom to make decisions about your own body.

Now, that video is obviously her, and on social media we have become adept at figuring that out.

And while I'm not saying this isn't a problem, how do we ultimately stop this without censorship?

And it's not limited to the U.S. Check out this video from India. That dancing guy looking like a rock star is an Indian politician, and this person is actually dead.

SOURCE: NBC News on YouTube

A famous Indian politician who is no longer alive, shown using a deepfake.

And, of course, there's the well-known case of Keir Starmer, the Prime Minister of the United Kingdom. Please take a listen to what he supposedly said.

Today, I convene with police chiefs from across the nation to formulate a decisive strategy to address the scourge of white working-class protests.

These far-right f**cks have observed BLM riots and Islamic demonstrations, and I assume they could replicate them.

Let me be unequivocally clear. You do have the right to protest in this country unless you are white and working class.

Now, there we have a problem: a racist solution supposedly presented by the Prime Minister. But would I, or would a social platform, be able to identify it as fake?

We'll talk about that in a second. Easy, right? Because they're techies, they can do anything. But what you do is leave free speech in the hands of people who, honestly, I won't say they don't care.

But if they do care, like Elon, it's still unclear whether the way they care is the way it should be done.

After all, if you go to any site that represents free speech, it's more like fringe speech. Look at Rumble or BitChute, which is really organic.

All these sorts of things make us uncomfortable. Conspiracy theories and people with unique points of view all need protection as free speech, even though most people don't see it that way.

See, free speech is not comfortable, but it's needed. And while deepfakes are taking things to another level, the reaction, trying to block this through some quasi big tech and government agreement, is just wild.

So, you have to ask yourself: if deepfakes are a severe problem, is the solution to let government and social platforms be the arbiters of free speech?

Try asking A.I. if the images I showed you should be protected. If you think it hallucinates when you ask it a question, ask it about comedy, parody, and satire.

What do you think its answer is? Is it comedy if they don't laugh, if A.I. doesn't laugh?

I've put this question to Gemini, Claude, ChatGPT, and Midjourney. They all stop me from creating political content with real political people.

You can get it done, but they're stopping that at the source. What's happening is that we're allowing billionaire social media owners and trillion-dollar corporations to join forces to protect free speech by eliminating it, because nothing says open discourse like a soundproof echo chamber.

It's beyond their capabilities. But that doesn't stop politicians like Gavin Newsom from pushing that responsibility to social platforms while pretending to regulate.

The deep state of deepfakes—California's farcical ploy into A.I. policing.

Now, politicians have found a foolproof way to stop deepfakes by making reality so absurd that no one can tell the difference.

Take that, A.I. Well, these deepfakes bother politicians more than social media users.

Later, I will show you that they're not the problem. In an effort to save democracy, we're deciding to kill it.

But don't worry, we'll deepfake it later, right?

We'll bring it back to life. Look at California's Defending Democracy from Deepfake Deception Act of 2024. I mean, wow. It's always a stunning title.

That sounds so good. However, the California plan is to stop deepfakes related to elections.

It's overly broad, but social platforms must remove deepfake videos 60 days before and 60 days after the election.

But who is going to police all of this? Even in the bill, they said, well, the social platforms have the technology.

It bans the distribution of deceptive audio or visual media of candidates and puts the liability on social platforms, even when crazy people are using A.I. to generate these images for fun, laughter, or parody.

And you're expecting the platforms to tell the difference. The good part is that this act tries to protect voters from misleading content, but it misses the point.

I'll share that point later. Remember Auntie Meiyu? That's the solution.

Strong legal accountability for tech platforms is great, but this is asking a lot. It risks censorship and is burdensome for the platforms to implement.

In fact, 18 states in the U.S. now have deepfake regulations, and they're all over the board. California's law seeks to stop deepfakes by pushing it all onto social platforms.

Texas is making it a crime to create and disseminate election-related deepfakes. Oregon, Utah, and Michigan have passed laws relating to it, and many states have pending legislation looking at it.

Even the U.S. federal government, in the Deepfakes Accountability Act, is trying to outlaw deepfakes, especially those weaponized for elections, fraud, or harassment.

Under it, however, deepfakes must include clear, permanent disclosures, which is the opposite of what deepfakes do.

Violators could face $150,000 fines or five years in prison. Those violators might include social platforms. So, it gives us a great legal framework.

It imposes heavy penalties and includes transparency tools, but again, it unintentionally limits free speech, including satire and artistic creativity.

Just like California says,

"Oh no, we're not stopping parody or satire."

How is this supposed to be filtered?

How is that judgment supposed to be rendered by tech, by people who think A.I. is magic? Take a look at people who don't understand this.

Overregulation could also impact the advancement of A.I. tech.

They are trying to slow it down with 20th-century government rules. Hey, in the E.U., they've had a Code of Practice on Disinformation since 2022.

They're demonetizing it, increasing political ad transparency, and strengthening cooperation between platforms and fact-checkers. Great stuff.

However, platforms again have to improve their tools and provide better access to data to the government. Now, a lot of people signed off on it.

It's great for transparency and election integrity, enables cross-industry cooperation, and theoretically eliminates disinformation, but it's voluntary.

There's no enforcement, and its effectiveness hinges on the platforms complying with these rules. And therein is the problem.

They might even try. But can they do it?

And are you putting this on ten different companies to do it ten different ways?

Deepfake democracy: When pixels and politics collide

Let's say big tech can figure out a way to block this. California says the social platforms can do it. Push it off to them. After all, they're the techies.

They do stuff we need help understanding. And it's A.I., after all. It's magic.

Well, social media platforms are gaining godlike powers of discernment.

What is this, Black Mirror or real life?

Each platform would likely create more problems for its users than solve them. Talk about false positives.

Facebook now has 100% accurate B.S. detection; your democracy is guaranteed.

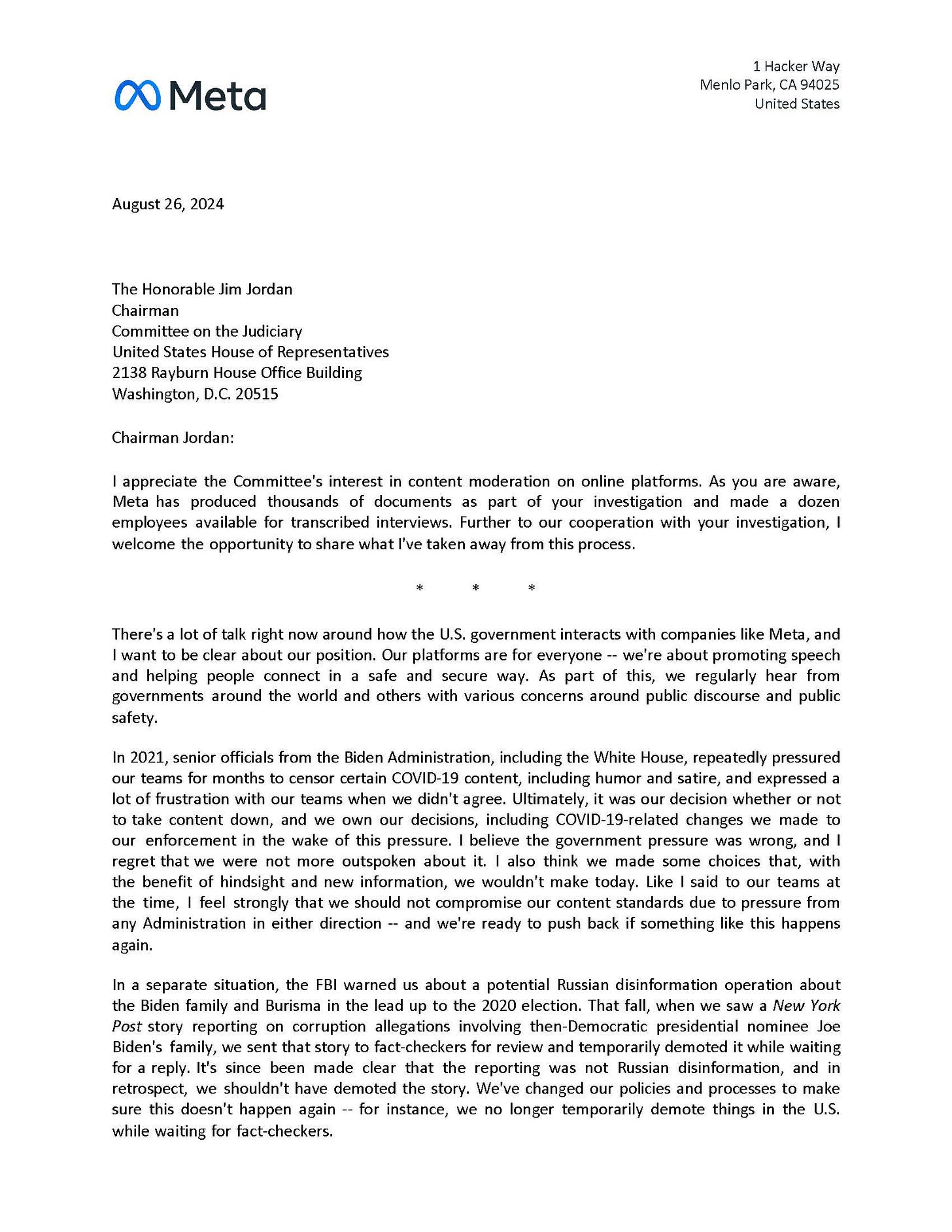

We saw this in the Mark Zuckerberg letter that he just sent to Congressman Jim Jordan to address the Hunter Biden Burisma story that was shut down in 2020.

They received a warning letter from the FBI. This article was called potential Russian disinformation, even though it was in the New York Post.

So, they temporarily took the article down. The thing was fact-checked. It turned out the report was not Russian disinformation.

Meta later acknowledged it should not have suppressed the article. Some say Zuckerberg wrote this letter to cover his back in case Trump gets elected.

But this is an example of how pressure from political institutions can influence these powerful platforms.

This prevented an important article from circulating prior to the election, so it was its own form of disinformation and election interference.

The Hunter Biden article illustrates the problem with government regulation of deepfake political content. We don't have the tools.

And censorship is never a game of really protecting us.

It's always a game of power.

So, how do you expect nuance, humor, and human parody to be judged by A.I. that doesn't do nuance?

Well, how do they know? And it even helps create what the Brookings Institution calls the liar's dividend: the denial video.

If you don't like an attack, you call it a lie. The ones you do like, you approve. Either way, you win. It doesn't even matter what the truth is.

The idea that the algorithms already exist, and that social media is just hiding them and can simply switch them on, misses the fact that there are at least ten huge platforms, and each one would do it differently.

We're trusting them to define what is true political content and what isn't. So, as annoying as it is, is this really the problem?

The Great Deepfake Caper: When A.I. Meets the Regulation Rodeo.

The real problem isn't deepfake videos; it's the A.I. deepfakes you need to learn about because they aren't online: robocalls, texts, and even posters.

Should the government decide who you should listen to, or whom to block online? And even if they do block them online, does that solve it?

Listen to this robocall and see if you can spot the deepfake.

It's essential that you save your vote for the November election.

Voting this Tuesday only enables the Republicans in their quest to elect Donald Trump again.

Now, no, that wasn't Joe Biden. The voice was from a fake robocall.

That's where the A.I. gets crazy, not in online social media, where the focus is clickbait.

Those weird videos I showed you are annoying. They could be better, but there are other sources of the problem.

That robocall going out to somebody who doesn't see Biden but thinks it's him is the problem.

And how about this one: A.I. microtargeting, A.I. tools that target people based on their buying behavior?

They even track whether people go to church, using pictures of license plates that the government and other organizations can buy from third parties. But this is no conspiracy. It's been happening for years.

That A.I. microtargeting by email and text is super important to regulate.

That has nothing to do with deepfake videos. It's deepfake text and really subversive targeting.

So if you're going to try to stop A.I.'s effect on elections, you're going to find a lot more of it in robocalls and in the emails and texts sent by people who know your behaviors, your triggers, and your psychological profile. That is what's really behind the deepfake.

That's creepy. That's cringe. And finally, if you think deepfakes are only online, look at this poster showing a drawing of Kamala Harris in a Philadelphia Eagles helmet. The subtitle says "Official Candidate of the Philadelphia Eagles," with a link to the Philadelphia Eagles football website to vote.

What's funny is that no one knows right now, as I'm recording this, who put up those posters.

They're on bus stops all over Philadelphia. And if you know Philadelphia, they love their Eagles, right? They love their football players. And this looks like an endorsement of Kamala Harris.

You want to talk about a deepfake? We don't even know who did it. It's in the real world, so it isn't tracked the way the Kamala Harris video is, which is getting millions of Twitter views.

It makes us realize that the problem is right in the real world. So, how are we going to stop that penetration? And the Eagles have come out and said, we don't support this. We don't support political candidates.

But if somebody walked by that, would that matter? That's the real threat of deepfakes way beyond what's online. It's what's reaching us in the real world.

Maybe Auntie Meiyu Has the Answer

I told you at the start that an aunt is solving the deepfake problem in Taiwan. It's a bit of a joke to say she's solving it.

Still, it comes from technology that is interested in protecting people, not in enforcing more power.

Auntie Meiyu is a chatbot from Taiwan that counters political misinformation, mainly from Chinese government sources, in Taiwanese media.

It focuses on fake news and political deepfakes by offering real-time fact-checking.

So the chatbot, which you operate as an individual, leverages A.I. in collaboration with civil service organizations to counter disinformation, particularly during elections.

Its proactive approach helps reduce the spread of deepfakes by empowering users to verify questionable content quickly.

You get fast fact-checking that is directly accessible to the public, although it is limited to Taiwan and reliant on users flagging content.

What's different is that it's a real-time fact-checking model focusing on instant verification via a popular messaging app, similar to WhatsApp.

The app is called LINE, and the chatbot is specifically tailored for Taiwan's disinformation challenges.

While other systems like the E.U. Code of Practice or U.S. deepfake laws emphasize platform regulation and long-term policies, Auntie Meiyu is user-centered and can provide immediate, on-demand fact-checking.

Its localized approach gives it an edge in engagement, though it lacks enforcement power. But what matters is that it's in the hands of the users.

So, Auntie Meiyu is a much better solution than policing every social platform. And what if we had something like that here: cooperative, very pro-society, and trained on the specific disinformation that we have in the U.S.?

Taiwan has disinformation, the European Union has disinformation, and the U.K. has disinformation.

The deepfake political survival guide is here.

I'll give you the three steps.

Step one: trust nothing.

Step two: question everything.

Step three: realize you're probably a deepfake too.

Now, we need balance. Deepfakes are dangerous. They do put out misinformation, but the regulatory attempts are a control play.

And that play can't even scale, because this technology doesn't exist within each of these ten private social platforms, which would also get access to very private data, unlike Auntie Meiyu, which doesn't share the data with anyone.

Maybe this is helping us get our critical thinking going. We're all talking about upskilling in A.I.; they always say to get your critical thinking out.

Well, here it is. And I don't know anyone on social media who inherently believes what they see.

In the battle between A.I. and bureaucracy, the only guaranteed loser is common sense.

So the solution is a third-party app like Auntie Meiyu, dedicated to protecting the users and reversing the paradigm of being in the platform's control.

It is not part of the government, so it can make mistakes. It is not part of the social platforms, which are really afraid of liability.

It shares knowledge that is not owned by a government, and it is trained for each country or culture.

Imagine freedom not just for speech but from misguided regulation: silly solutions that pretend to apply a 20th-century model to a 21st-century problem, one that moves way faster than the old model of creating giant reports nobody reads and making grandiose statements that don't understand the technology.

It's an evolution, not a way to restrict what we don't understand.

So what do we need to do? We must stop seeing the problem as deepfake political content that we can control and shut down.

That won't solve it. The real solution is to create a third-party application that works around it and works for the user, not for the social platforms.

So, this is a bluff. If you want to stop deepfake election fraud and stop misinformation, start by creating something that works for the user and not for a third-party private business or a government whose real issue isn't free speech but power.

Free speech is essential, as fringe as it may look.

We need to protect that, but we need to do that by starting with the people, not with those in power.