Whenever I ask about getting content back out of AI, I keep hearing:

"AI is a black box. NO one understands."

And...I accepted that. After all, I’m not an engineer.

I was stuck in this AI Black Box myth - and told I wouldn't ever understand. Until a listener shared a comment that made me do a triple take. Dang.

Now that there are AI tools to detect copying and protect copyright, it's time to turn around and stop staring into the black box.

Wow, crack 1 – the AI black box hype may just be that, hype.

Some companies are finding their content inside an AI system with just a few tweaks - the same content tech companies say is "transformed beyond recognition" and impossible to track.

I've been repeating what the big AI companies told us - once your content turns into "tokens," it's like mixing paint.

You can't unmix it, you can't identify it, you can't remove it.

I bought it. All of it.

And why wouldn't I?

These companies kept saying the same thing - it's all math and statistics, it's all transformed, it's fair use. The black box defense.

Then reality hit me.

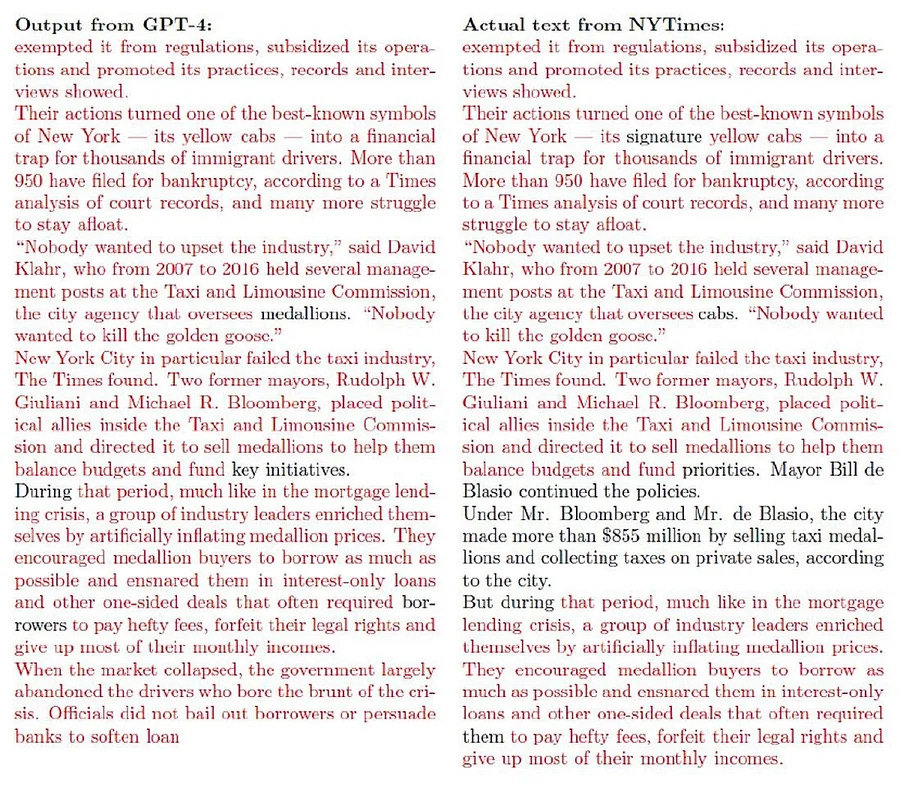

The New York Times, in their legal filing, shows how ChatGPT spit out their articles almost word-for-word, possibly by tweaking a "randomizer" setting.

It's like finding a knob on your blender that suddenly unblends your smoothie back into whole fruits.

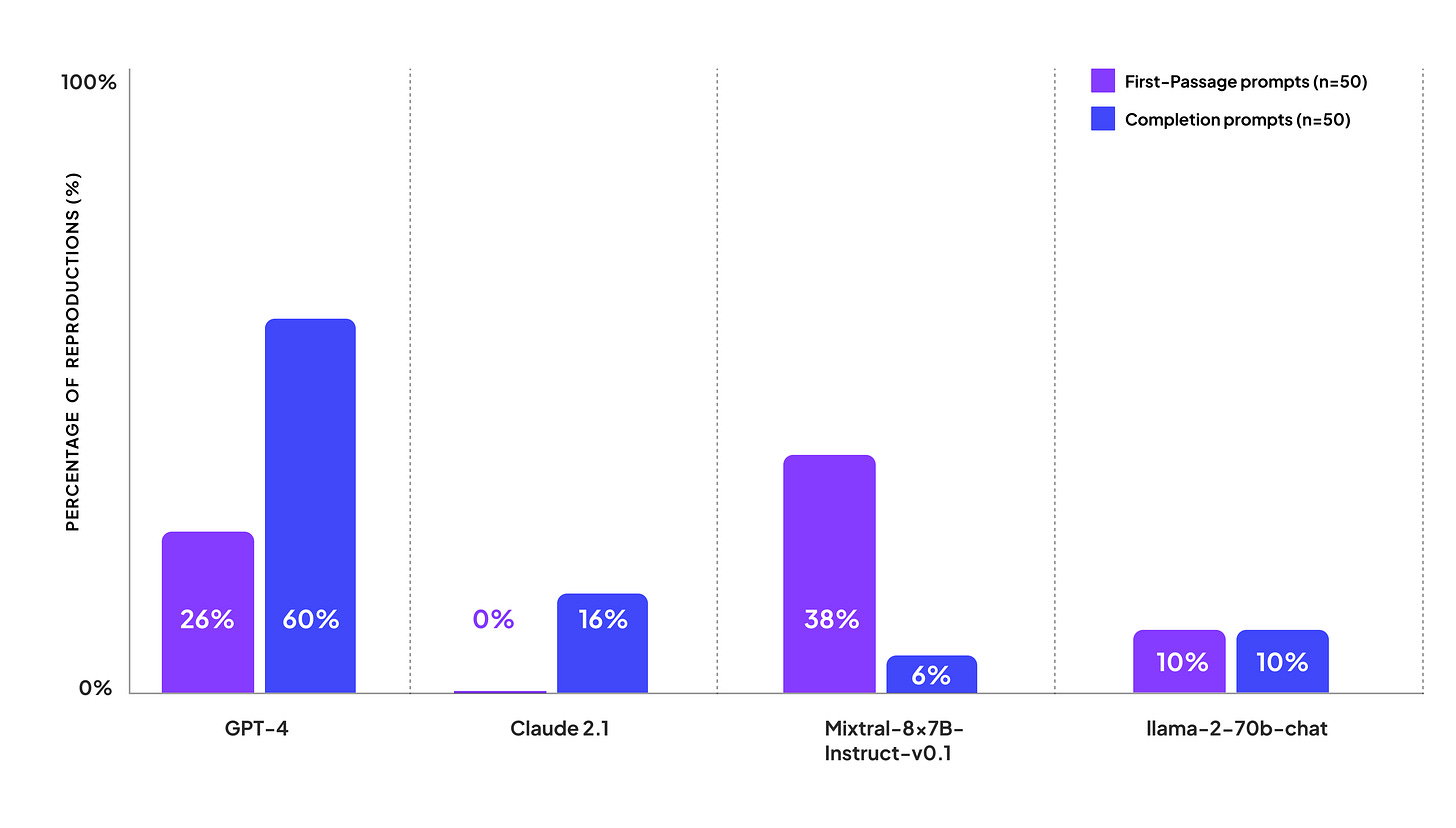

I found out about Patronus AI and their CopyrightCatcher. They ran a test with ChatGPT and found that 44% of what it produced was copyrighted content.

Not "inspired by" or "in the style of" - actual copyrighted content that could land someone in legal hot water.

If AI isn't really a black box after all, all those company valuations built on the idea of "we transformed it, so we own it" could come crashing down.

If your copyrighted content (or something close enough) can be found inside these systems, the whole black box story changes.

Now creators can gain control and protect their work by taking a few steps.

No engineering degree required. Often for free.

Recreating Copyrighted Content: Does AI Remember?

The idea of AI as an impenetrable black box? The evidence against it is hard to ignore:

Patronus AI's Discovery: 44% of ChatGPT outputs were copyrighted content in their early test (2024).

The NY Times Prompt Hack: How adjusting a simple "randomizer" setting revealed much of the original articles.

The Visual Evidence: Researchers Gary Marcus and Reid Southen showed Midjourney could create images displaying copyrighted characters from Marvel, Star Wars, Sonic, The Simpsons, and other major franchises, showing the same issue exists across text AND visual AI.

The Key Insight: Thomson Reuters, owner of the Westlaw legal research business, defeated a fair use defense from Ross Intelligence, which had legally purchased data from a third party to train its AI.

Ross is finding that material and removing it from its AI. A defeat for the copyright fair use defense.

Turn down the randomness, and out comes content that looks an awful lot like what went in.

This matters because it may mean your work isn't being "transformed beyond recognition" as we've been told—it's being stored in patterns that can be pulled back out. At least in these examples.
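For the curious, here is a minimal sketch of why a randomness setting matters. It assumes the "randomizer" is what engineers usually call temperature, which the sources above do not confirm. The candidate words and their scores are made up for illustration and do not come from any real model.

```python
import math
import random

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def pick_next_word(candidates, scores, temperature, rng):
    """Sample one word according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]

# Hypothetical scores a model might give to three possible next words.
candidates = ["memorized", "similar", "novel"]
scores = [3.0, 2.0, 1.0]

rng = random.Random(0)  # fixed seed so repeated runs behave the same
for temperature in (1.5, 1.0, 0.1):
    probs = softmax_with_temperature(scores, temperature)
    picks = [pick_next_word(candidates, scores, temperature, rng) for _ in range(1000)]
    share = picks.count("memorized") / len(picks)
    print(f"temperature={temperature}: top-word probability={probs[0]:.3f}, sampled share={share:.3f}")
```

At a high temperature the top-scored word wins only a little more than half the time. At a low temperature it wins almost every time, which is why a model that has memorized a passage is more likely to repeat it when the randomness is turned down.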

Patronus AI's approach worked because they focused on outputs rather than trying to peer inside the box.

Instead of trying to catalog all copyrighted material in training data (impossible), these tools focus on clear ownership of specific works and detecting when those works appear in AI outputs.

It's a bit like how Shazam works. Shazam doesn't need to understand music theory or know every song ever recorded.

It creates unique fingerprints of songs and matches them against what it hears. The same principle applies here - create a unique fingerprint of your creative work, then search for matches.
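A rough sketch of that fingerprint-and-match idea for text, assuming a very simple scheme: hash every run of five consecutive words, then count how many of those hashes reappear in an AI output. This is not how Shazam or CopyrightCatcher actually works. The function names, the five-word window, and the sample texts are all illustrative choices.

```python
import hashlib
import re

def fingerprint(text, shingle_size=5):
    """Return a set of short hashes, one per run of `shingle_size` consecutive words."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    shingles = (
        " ".join(words[i:i + shingle_size])
        for i in range(len(words) - shingle_size + 1)
    )
    return {hashlib.sha256(s.encode("utf-8")).hexdigest()[:16] for s in shingles}

def share_of_work_found(work, ai_output, shingle_size=5):
    """Fraction of the work's fingerprint that also appears in the AI output."""
    work_prints = fingerprint(work, shingle_size)
    if not work_prints:
        return 0.0  # the work is too short to fingerprint
    output_prints = fingerprint(ai_output, shingle_size)
    return len(work_prints & output_prints) / len(work_prints)

# Made-up example texts, for illustration only.
my_work = "The lighthouse keeper counted the ships each night and wrote their names in a blue ledger."
copied = "As the story goes, the lighthouse keeper counted the ships each night and wrote their names in a blue ledger."
unrelated = "A keeper of bees writes down the weather every morning in a small red notebook."

print(share_of_work_found(my_work, copied))     # every five-word run reappears
print(share_of_work_found(my_work, unrelated))  # no five-word run reappears
```

Exact hashing like this only catches word-for-word reuse. A light paraphrase would slip past it, so a real detection tool presumably needs something more forgiving.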

So if these systems remember and reproduce specific content, shouldn't creators have some say in how their work is used?

That's what the next generation of tools is helping to address.

3 AI Copyright Tools for Creators

You don't need to be a tech expert to protect your creative work in the AI era. A new wave of simple tools is making copyright protection accessible, regardless of tech background.

Pixsy: How photographers and visual artists can track and protect their work

CreatedByHumans.ai: A simple way for writers to timestamp their unique style

Credtent.org: The multi-media platform that takes minutes to register your work

The Process: Upload → Set preferences → Get verification → Monitor → Take action

All three of these tools help establish clear ownership of your work, set preferences for usage, and join networks of creators with similar concerns.
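To make "establishing ownership" a little more concrete, here is a minimal sketch of one common building block: a content hash paired with a timestamp. It is an assumption for illustration, not a description of how Pixsy, CreatedByHumans.ai, or Credtent.org work internally. The record fields and function names are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

def make_ownership_record(title, author, content_bytes):
    """Build a small record tying a work's exact bytes to a moment in time."""
    return {
        "title": title,
        "author": author,
        "sha256": hashlib.sha256(content_bytes).hexdigest(),
        "recorded_at_utc": datetime.now(timezone.utc).isoformat(timespec="seconds"),
    }

def matches_record(record, content_bytes):
    """Check whether a file is byte-for-byte the work described by the record."""
    return hashlib.sha256(content_bytes).hexdigest() == record["sha256"]

# Placeholder work and names, for illustration only.
essay = "My original essay text goes here.".encode("utf-8")
record = make_ownership_record("My Essay", "Example Author", essay)

print(json.dumps(record, indent=2))
print(matches_record(record, essay))               # the untouched work matches
print(matches_record(record, essay + b" edited"))  # any change breaks the match
```

A record you create yourself only shows what you had on your own machine. The point of registering with an outside service is that someone else can vouch for the date.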

While individual creators have limited leverage, emerging networks like these may negotiate effectively, much as ASCAP does for musicians.

What's powerful about this approach is that you're preparing for the future now.

With California's DELETE Act and the EU AI Act pushing for more transparency, having your creative work properly documented and protected puts you ahead of the curve.

It's not about stopping all use of your work - it's about having a say in how it's used and getting compensated if possible.

This isn't about being anti-AI.

It's about finding a balanced approach where AI advances while respecting the rights of creators. The tools exist.

They're generally affordable—often free.

They're easy to use.

For creators looking to protect their work and potentially benefit from AI usage, they're worth exploring.

"There is a crack in everything, that's how the light gets in." - Leonard Cohen

Cracks are appearing in AI's Black Box, revealing copyrighted material, just as Patronus AI, the New York Times, and Gary Marcus found.

Two distinct cases are emerging:

The "input" case—alleging that OpenAI illegally hoovered up New York Times articles to train ChatGPT without compensation.

And the "output" case—arguing that when prompted right, ChatGPT may spit out a New York Times article that readers would pay a subscription for.

Why does this matter? Three simple reasons:

First, it makes copyright claims stronger. If AI spits back similar, specific works, the whole "we're just learning patterns" defense falls apart.

Second, we need to watch what goes in AND what comes out of AI. Most tools focus on spotting AI-generated content.

Knowing when your content was used for training in the first place might be more important.

Third, it shows these companies can track specific content if they want to. And if they can track it, they can credit it and pay for it. The technology exists.

Some AI companies are already building systems that tell you where information came from.

When you ask a question, you get both an answer AND the sources it used. Kind of like footnotes in a book, but for AI.

AI isn't a magical black box. It's more like a really advanced library with a randomizer switch.

Sometimes it mixes books together to create new stories, but it can also just read you pages from specific books it remembers.

The "we don't know what's in there" excuse is running out of steam. These systems remember, they store, and with the right settings, they reproduce.

Because creativity isn't contained in black boxes, nor is AI. It's more open than we think, less powerful than we believe, and...

Hungry for human creativity because without it, the data becomes stale.

The output becomes another copy of another copy, copied from someone.

Want an AI that works for you?

These tools are just the beginning. Add to them an audience you build over the next 2-5 years, and you've got something AI can't replicate:

🚀 direct connection with people who value what only you can create.

Protection is becoming easier. Compensation is tricky, but now you've got proof of what's yours. And that proof is the first step toward everything that follows.

AI is more beginning than ending, and despite the headlines, it’s still super early.

And we're beginning to discover what it really means to be human, and the value of creative life in a world where we now know—the black box has cracks after all.

The light getting in? Human creativity, more dynamic than AI.

RESOURCES

How a New York Times copyright lawsuit against OpenAI

Generative AI Has a Visual Plagiarism Problem

HarperCollins Faces Backlash Over $2,500 AI Training Deal with Authors

Penguin Random House protects its books from AI training use

Penguin Random House underscores copyright protection in AI rebuff

Thomson Reuters wins AI copyright 'fair use' ruling against one-time competitor

Authors strike back against AI stealing their books as licensing startup

How does Shazam work? Music Recognition Algorithms, Fingerprinting, and Processing

AI Copyright Tools

Pixsy - Fight Image Theft (founded 2014)

MUSIC

Open Music Initiative - using APIs

CONTENT AND ART

Verisart - Protect your Artwork

Leonard Cohen photo

By Rama - Commons file, CC BY-SA 2.0 fr

https://commons.wikimedia.org/w/index.php?curid=53046764