"'I've always tried to understand the world underneath everything, because that's really what's going on,

We live on the surface.

And unfortunately, the culture that we live in is also absolutely obsessed with surface... But that isn't reality.'

What you're looking at is more powerful than any legal argument.

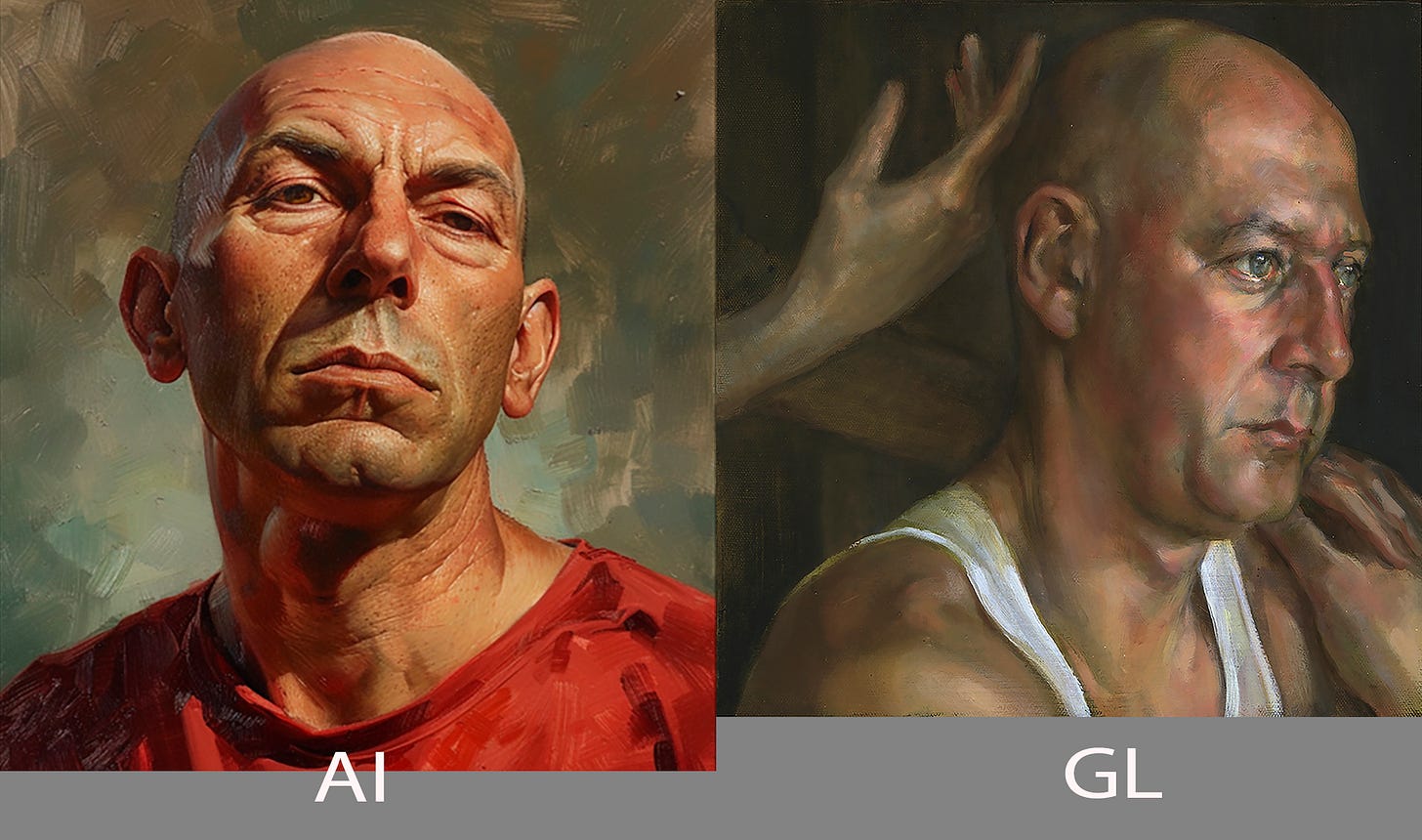

On the left, AI-generated "in the style of Geoffrey Laurence." On the right, an actual Geoffrey Laurence painting.

"On first glance you could say, oh yeah, that's a Geoffrey Laurence, but I would never do that in the background.

I would never make a background like that.

Look at the reflected light in the cheek and just below the ear.

Do you see the difference between that and what the machine has done on the left side of his face? How crude that is."

This visual difference shows what's at stake in these copyright cases:

It's not just the final image - it's the expression, the emotional resonance that Geoffrey describes as "the world underneath everything"

And now we begin with 3 Case Files, and First…

a Case without a file:

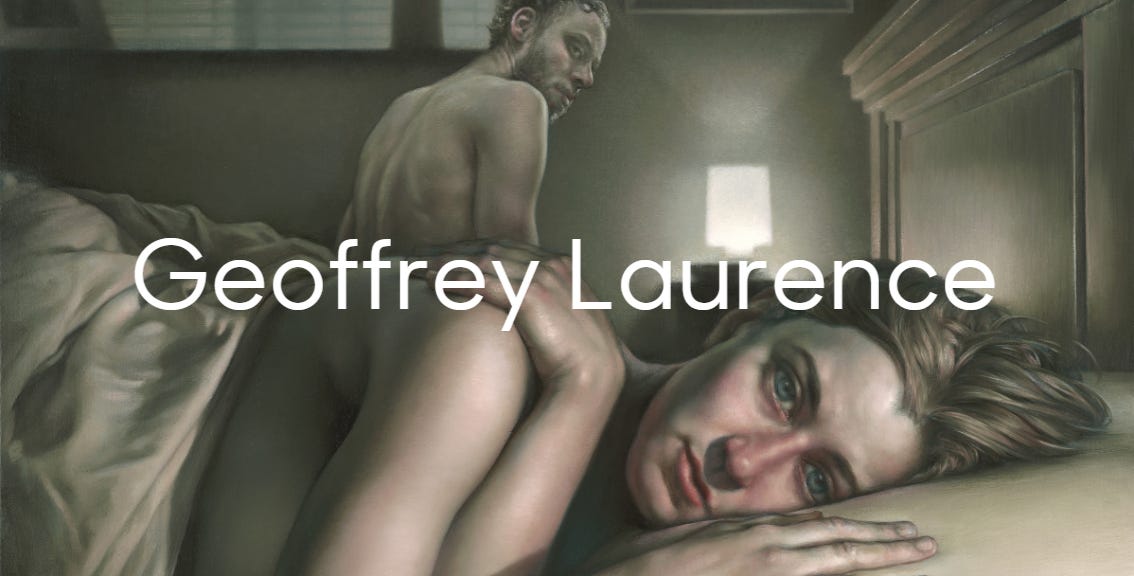

Case with no number, Geoffrey Laurence. Artist, 56 years. All his work sucked up by ChatGPT because he shared it on that flat surface called the Internet.

His work has been shown in museums worldwide yet to the AI bots, he’s just another pixel towards training that diffusion model.

Because AI is “learning”, all good to take whatever content they can scrape buy or borrow.

And it’s the opposite of what I've discovered investigating the three major AI copyright lawsuits.

The headlines focus on copied text, reproduced images, and legal technicalities.

But that's just the surface. We’ve covered those in detail, picking apart the real from the not so real.

Yeah they matter, but to a judge with no precedents and little real guidance, it comes down to one factor they look at, when they look beneath the surface….

Then Swen Werner with a comment suggests I read his Substack My Digital Truth® - Fusionistic

Asking me to look beneath the surface and shows me a Guy Fieri example, the one that went viral, and how it shows the dancing with the facts that happens in legal matters and with attorneys….

First glance you get angry, then you get curious. Then you take action.

Sitting still is not in the cards for creators.

When you follow the money trail like I have, these lawsuits tell a completely different story – one that has little to do with Geoffrey or the millions of other creators without powerful lawyers.

Today we're opening three case files that reveal what AI copyright lawsuits are REALLY about.

When we pull apart the legal word salad and find where the actual decisions will happen, you'll usually find it when you follow the money.

Beneath the surface reality of AI is a money trail telling a different story – one defining the future relationship between human creativity and artificial intelligence.

Case #1: Thomson Reuters vs ROSS Intelligence

Exhibit A: The Competitor's Dilemma

Let's open our first case file.

Thomson Reuters, owner of Westlaw, created a valuable legal research system with human-written headnotes summarizing key points of law.

This represents decades of expert legal services – their intellectual property.

ROSS Intelligence wanted to build a competing AI legal research tool. When Thomson Reuters refused to license their content (seeing ROSS as a competitor), ROSS didn't give up.

Instead, they hired LegalEase, a third-party research firm, to create 'Bulk Memos' containing legal questions and answers.

Essentially, ROSS had found a workaround to use Thomson Reuters' data after being explicitly denied permission.

ROSS bought more than the usual legal office would touch in a decade worth of legal memos all at once – 25,000 of them – creating an anomaly so large it couldn't be ignored.

Thomson Reuters didn't need to find their content in ROSS's output.

The court focused on the training data itself – the Bulk Memos used to teach the AI – finding that they resembled Thomson Reuters' original headnotes.

Follow the money trail:

This wasn't about protecting content – it was about protecting market position.

Thomson Reuters faced a direct competitor attempting to leverage their decades of investment without compensation.

That's what drove the decision, not abstract concepts about AI copying.

Why is this sending a chill through Silicon Valley?

Because copyright violations after AI had legally purchased data was found. On the input, which is the key to the AI industry which states it has a right to use this under fair use.

Fair use defense took a big hit in this one.

Case #2: NY Times vs OpenAI

Exhibit B: The Media Model Under Threat

Our second case file reveals something fascinating about the New York Times lawsuit against OpenAI.

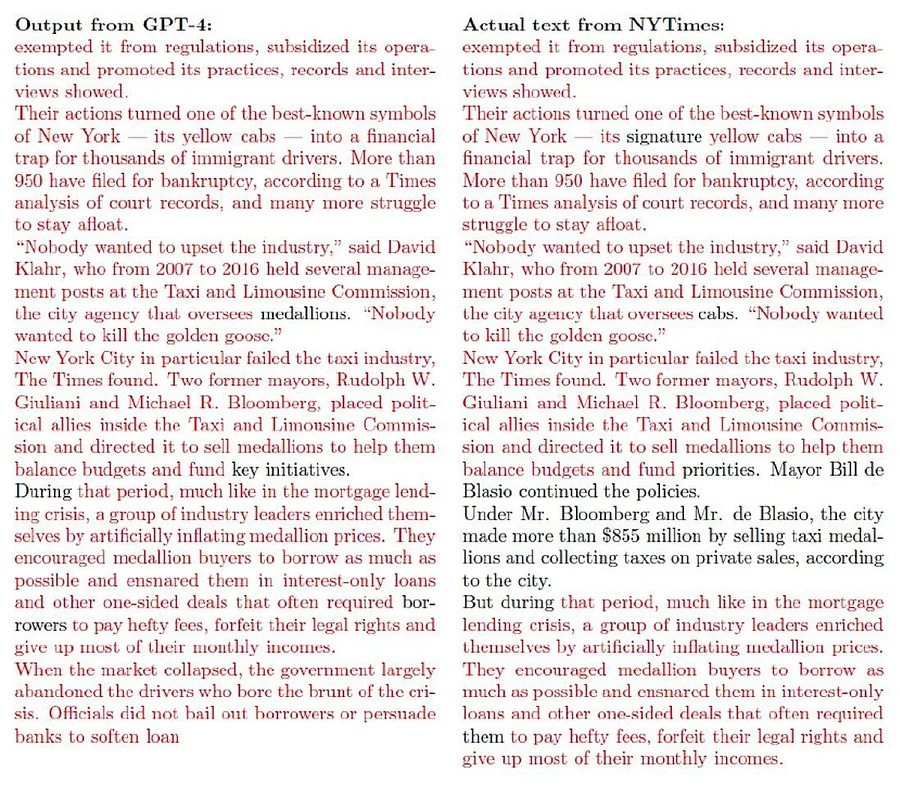

The Times claims ChatGPT is essentially stealing their journalism. Their smoking gun? When prompted, ChatGPT reproduced parts of a viral restaurant review about Guy Fieri.

But dig beneath the surface, and you'll find a different story.

As Swen Werner points out, there's a crucial detail the Times left out: ChatGPT only reproduced about 20% of the article – mostly the beginning that went viral across the internet.

What they didn't say was that it only recalled the most widely quoted parts that likely appeared on countless websites.

Is this evidence of direct copying or something else entirely?

The AI isn't 'storing' articles but recognizing text patterns that appear frequently online – similar to how you might recognize 'To be or not to be' without having memorized the entire play of Hamlet.

Follow the money trail:

This case isn't about copying snippets.

It's about the New York Times seeing AI potentially cannibalizing their subscription model by answering questions using information drawn from their journalism.

Their business model depends on being the exclusive source for their reporting – and AI threatens that exclusivity.

The New York Times lawsuit focuses on surface copying while the concern runs deeper: will people still pay for subscriptions in an AI-powered world?

Case #3: Getty Images vs Stability AI

Exhibit C: The Brand Value Heist

Our third case file might be the most visually compelling – and it crosses international borders in ways that dramatically impact the outcome.

Getty Images, a major stock photo provider, is in legal action against Stability AI, claiming they 'scraped' millions of Getty's copyrighted images without permission to train their Stable Diffusion AI model.

Unlike the other cases, Getty has visual evidence that's impossible to ignore – AI-generated images that accidentally reproduce Getty's distinctive watermarks.

It's like catching a forger who accidentally left the original artist's signature on their work.

This case is being tried in the UK, not the US. Stability AI claims the training didn't happen in the UK at all, but in the US, potentially putting it outside UK copyright jurisdiction.

The court acknowledged that a crucial question is the location of the training.

Follow the money trail:

While 11,383 images aren't that many in the grand scheme of AI training data, finding Getty's brand appearing throughout Stability's outputs reveals what's really at stake.

This isn't just about images – it's about Getty's brand value, worth billions, being used without compensation.

Stability argues that their AI doesn't 'memorize' images but learns patterns, creating new works rather than copying.

They even claim any similarity to Getty images comes from user prompts, not the underlying model.

When AI reproduces a Getty watermark, it reveals that the machine doesn't understand what it's copying – it's just pattern-matching without comprehension.

The economic impact is clear: Getty Images watches as AI tools generate images that could eliminate the need for stock photo licenses, potentially undermining their entire business model.

Buying AI Data with Copyright Diligence

These three lawsuits are reshaping how AI companies acquire and use data. The wild west era of "scrape everything" is ending as courts increasingly recognize that:

Training = Using: Using content to train AI, even without displaying it, can be infringement

Intent Matters: Building direct competitors with someone else's content faces greater scrutiny

Economic Impact Drives Decisions: Cases with clear business model impact have stronger claims

Now what do we do with the damage caused, and how does this work going forward?

We're watching three lawsuits that will determine whether AI thrives or stumbles in America - and I'm struck by how both sides are missing what's really at stake.

It's not about algorithms or legal precedents. It's about who gets to profit from human creativity in the age of AI.

When the New York Times sued OpenAI, they claimed ChatGPT was essentially stealing their journalism.

The Thomson Reuters case showed that even without visible output, training AI on copyrighted material may still be infringement when building a direct competitor.

A special kind of sneaky move by ROSS - after being denied a license, they bought 25,000 "Bulk Memos" through a third party to train their legal AI.

An agile AI startup meets a big company that watches it’s 3rd party data purchases.

The Getty Images case provides the most visible evidence - AI art generators that accidentally reproduced Getty watermarks.

But while others might have felt their work was taken by AI, Getty’s brand is what emerges, which makes that one even harder to figure out.

With their recent merger with Shutterstock in January 2025, the value of that company is about $3.5B US.

So all those Getty Images name and logo appearing, whether with an image or not, are more than copyright violations. They impact brand value by putting Getty’s name next to sometimes odd output.

Because like NY Times snippets, the reconstruction of that repetitive brand copyright image became a recognizable pattern to AI.

That’s more than content, that’s AI taking a brand by accident….which is why this case will be so hard to decide. The numbers here are huge.

Add onto the fact that Stability’s location makes copyright a question of which country, US or UK, but the Getty Images brand is worldwide.

When we look beneath the surface of these three case files, a clear picture emerges.

These lawsuits aren't ultimately about copying, or technical details of how AI works – they're about who profits from human creativity in the age of AI.

Thomson Reuters doesn't want a competitor using their legal analysis to build a competing product

The New York Times doesn't want AI undermining their subscription model

Getty Images doesn't want its brand value appropriated without compensation

These economic realities, not abstract legal principles, will likely determine the outcomes.

The Human Cost Both Sides Are Missing

AI engineers often see creators as entitled complainers who don't understand technology, clinging to outdated ways.

Creators see engineers as people who don't respect the thousands of hours it takes to develop creative skills. Or create.

Neither side is truly listening. And they’re not even dealing with the facts, just opinions on whether AI is copying or evil, and whether creators expect to get paid for things they don’t deserve to be paid for….

If engineers win outright, what motivation will people have to create when their work just becomes training material for Big Tech's trillion-dollar AI systems?

If creators win completely, a thriving industry gets derailed, potentially setting America back in the AI race.

The solutions will likely involve:

Fair compensation systems that recognize when AI benefits from creative work

Clear attribution when AI builds on specific sources

Opt-out mechanisms for creators who don't want to participate BEFORE content goes into AI

Licensing frameworks that make compliance straightforward

Whether you're a creator like Geoffrey Laurence worried about your livelihood or a business excited about AI's potential, these cases will impact your future.

The companies that will lead us forward aren't just those with the best technology, but those building financially sound data practices into their foundations.

These legal battles aren't the end of the story - they're the messy beginning.

"What I find myself in now is a world that is purely engaged in surface," Geoffrey Laurence told me. "I've always tried to understand the world underneath everything, because that's really what's going on."

This is the perfect metaphor for our AI moment.

The companies creating AI are building tools that excel at surface pattern recognition.

But Geoffrey's work reminds us what lies beneath – the human emotion, the 56 years of craft, the ability to capture something ineffable.

The difference between surface copying and deep understanding matters - not just in art, but in how we build our AI future.

That's the money trail worth following.

RESOURCES

The NYT’s AI Lawsuit Hinges on a Misleading Claim—And Nobody Noticed

Every AI Copyright Lawsuit in the US, Visualized

What is Third-Party Audience Data? Examples, Datasets and Providers

Getty Images, Shutterstock gear up for AI challenge with $3.7 billion merger